Abstract

Task scheduling and resource management are critical for improving system performance and enhancing consumer satisfaction in distributed computing environments. The dynamic nature of tasks and the environments creates new challenges for schedulers. To solve this problem, researchers developed fuzzy-based scheduling algorithms. Fuzzy logic is ideal for decision-making processes since it has a low computational complexity and processing power requirement. Motivated by the extensive research efforts in the distributed computing and fuzzy applications, we present a review of high-quality articles related to fuzzy-based scheduling algorithms in grid, cloud, and fog published between 2005 and June 2023. This paper discusses and compares fuzzy-based scheduling schemes based on merits and demerits, evaluation techniques, simulation environments, and important parameters. We begin by introducing distributed environments, and scheduling process followed by their surveys. This study has summarized several domains where fuzzy logic is used in distributed systems. More specifically, the basic concepts of fuzzy inference system and motivations of fuzzy theory in scheduler are addressed smoothly. A fuzzy-based scheduling algorithm employs fuzzy logic in different ways (e.g., calculating fitness functions, assigning tasks to fog/cloud nodes, and clustering tasks or resources). Finally, open challenges and promising future directions in fuzzy-based scheduling are identified and discussed.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Task scheduling is becoming increasingly important as more clients use distributed systems. It is critical to consider a number of critical factors when managing Information Technology (IT) systems, including availability, execution time, and reliability. As a result, it is difficult to construct a model that integrates all of these performance metrics due to factors that are unrelated (Chiang et al. 2023; Mangalampalli et al. 2023a). To develop effective scheduling algorithms, it is vital to understand the limitations and problems associated with various algorithms. In this paper, fuzzy-based task scheduling strategies and associated metrics are discussed in grid, cloud, and fog environments. The main contributions of this study are as follows:

-

Analyzing fuzzy logic applications in distributed systems and the advantages and disadvantages of fuzzy logic.

-

Presenting a comprehensive analysis of the fuzzy-based scheduling in grid, cloud, and fog computing.

-

Developing a taxonomy to categorize grid, cloud, and fog scheduling algorithms.

-

Comparing the existing fuzzy-based scheduling algorithms according to QoS criteria and their appropriate applications.

-

Investigating the details of fuzzy systems that are used in schedulers.

-

Analyzing the evaluation tools used to evaluate the different approaches.

-

Proposing the possible future research challenges and opening issues.

1.1 Related surveys

It is critical that cloud computing users select the best resources for their tasks, which requires examining a variety of factors that affect their performance. In distributed systems, NP-complete problems are crucial for task scheduling algorithms. Recently, several surveys have been published in the literature due to the importance of task scheduling in research. In 2014, Chopra and Singh (2014) classified hybrid cloud scheduling techniques based on their features and functions. Wadhonkar and Theng (2016) compared several cloud-based task scheduling algorithms. Meriam and Tabbane (2017) identified different job scheduling algorithms and their applications. Hazra et al. (2018a) assessed a range of scheduling algorithms that aim to reduce energy consumption in cloud infrastructure as part of a study conducted in 2018. In 2018, Naha et al. (2018) presented an overview of fog computing scheduling algorithms. This comparison compared algorithms that used available resources more efficiently.

Hazra et al. (2018b) compared cost parameters to task scheduling algorithms in 2018. In 2020, Alizadeh et al. (2020) provided an overview of cloud-fog task scheduling based on methods, tools, and restrictions. Ghafari et al. (2022) provided an in-depth review of scheduling algorithms. The authors examined energy consumption in relation to scheduling algorithms. Table 1 summarizes the main features and aims of existing surveys. Table 2 also compares our review with other reviews based on various issues. Except for one review, all task scheduling algorithms have failed to address QoS metrics. The authors did not mention a fuzzy-based scheduling algorithm. Research on task scheduling using fuzzy systems needs to be reviewed comprehensively to keep up with the latest developments.

The following requirements motivated this article:

-

Task-resource mapping algorithms are in great demand in distributed environments. The use of fuzzy-based approaches in distributed environments is crucial for managing uncertainty and dynamic behavior.

-

Identify the benefits and drawbacks of each algorithm under consideration and suggest optimal algorithms based on the conditions. Algorithm selection is crucial to ensuring that consumers and providers are satisfied.

-

Making a decision based on available data is possible with fuzzy logic.

-

Identify the challenges of fuzzy logic. Due to the inherent flexibility of fuzzy rules, the development and analysis of fuzzy rules is a complex process.

-

Choosing the best simulator depends on the specific scenario, for example implementing cost-aware task-to-resource mappings or the makespan approach.

-

Identifying open challenges and future research directions in this field is necessary.

1.2 Paper organization

Figure 1 illustrates the structure of the study succinctly.

This paper is structured as follows: Sect. 2 explains distributed systems, grid, cloud, and fog computing, scheduling, and fuzzy inference system. Section 3 describes the research methodology. Section 4 describes a taxonomy of fuzzy-based scheduling algorithms. Section 5 compares these algorithms. In Sect. 6, future research directions are discussed as well as some open issues. Section 7 presents the conclusion.

2 Background

This section presents a brief introduction to distributed systems. In the following, scheduling and fuzzy system are presented.

2.1 Distributed systems

In distributed systems, multiple components are spread across multiple machines and are also called distributed computers. Coordinating these devices creates a sense of cohesiveness for users. Distributed systems are used by computer science (CS) and information technology (IT) to manage complex tasks. However, traditional options lack the advantages offered by distributed systems. Throughout the last 60 years, these systems have evolved. Figure 2 shows the evolution process. Technology and social change have led to a new role for distributed systems. While modern distributed systems enable new markets and artistic expressions, they also present new research challenges to comprehend and improve their operation. Figure 2 illustrates the development process for distributed systems.

A timeline of the distributed systems (Lindsay et al. 2020)

2.2 Grid computing

Systems can process heavy calculations using a network-based computing model. The combination of various services and facilities enables us to create an advanced system capable of handling heavy computing tasks not handled by a single device alone (Asghari Alaie et al. 2023). Figure 3 shows the layers of grid computing:

-

User Interface: The interface simplifies users’ search of the network.

-

Security: This computing environment relies heavily on the Grid Security Infrastructure (GSI). Performing network operations requires OpenSSL.

-

Scheduler: Multiple specific tasks can be run simultaneously with the help of a scheduler.

-

Data Management: The management of data is one of the most crucial aspects of any system or network. Through Globus Toolkit, grids can access secondary storage in a secure, trusted way.

-

Workload & Resource Management: When running on a network, an application must be aware of network resources in order to launch work on them and check their status.

Fig. 3 The grid computing layers (Guo et al. 2022)

2.3 Cloud computing

Distributed systems commonly use cloud computing. Cloud computing makes it possible to create remote networks, service providers, storage facilities, and applications. Using resources that are configurable and common, this is possible. This model allows customers to easily scale IT services via the internet (Pradhan et al. 2022). Figure 4 illustrates the four layers of cloud computing. Infrastructure as a Service (IaaS) component is one of the most important aspects of cloud computing. In computing, renting an infrastructure means paying for only what you use (e.g., Microsoft Azure). In this layer, you can access hardware, software, networks, and storage. Platform as a Service (PaaS) provides resources for building applications. This layer includes tools for developing and managing databases. Software as a Service (SaaS) consists of a software solution that users can connect to via the Internet and rent from companies and organizations. As a result of cloud top layering, a company’s business functions are outsourced. Customer service, salary management, human resource management, and accounting are all part of these processes.

The cloud computing layers (Masdari et al. 2017)

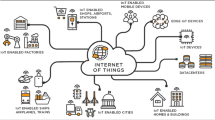

2.4 Fog computing

Cloud computing processes data more slowly than fog computing. There are nodes in the fog, which are devices (Kumari et al. 2022; Li et al. 2019). Nodes can also have Internet access, computing power, and storage as well as be any device with these capabilities. It is possible to use vehicles or workplaces as target points. Communication between IoT devices generates IoT data through a network (Javanmardi et al. 2021). The data is stored and processed by a cloud data transfer center. Fog computing environments are more geographically distributed and closer to end users, as shown in Table 3.

Fog computing is primarily used to reduce latency. Furthermore, this approach is capable of real-time analytics and functional reductions. It is generally considered that fog computing is the best solution for organizations that require quick data analysis and response. Several IoT applications can be implemented using this approach thanks to the high speed of information transfer and processing. Due to the large amount of data generated by IoT devices, it is time-consuming and expensive to transfer IoT data to data centers. It is greatly simplified to solve this problem with fog computing (Guerrero et al. 2022).

2.5 Scheduling process

Scheduling problems are typically NP-hard. Finding the right solution within a short and adequate period is sufficient. Most optimization problems require a variety of solutions (Mor et al. 2020).

Figure 5 shows a clear relationship between fog, grid, and cloud layers. Clouds are at the top, fogs are in the middle, and grids are at the bottom. Centralized systems consist of a central node that delegate tasks to other nodes. This node is called the primary node. In addition to the workers, there are also delegated nodes. Due to the fact that nodes may function as either primary or worker nodes simultaneously, primary/worker relationships are less clear in distributed systems. The primary nodes must communicate with each other in order for the system to succeed. Hierarchical distributed systems fall into the third category. In hierarchical distributed systems, predecessors and successors are not able to communicate. The scheduler’s scope can vary depending on the architecture.

The relationship between cloud, fog, and grid (Yu et al. 2022)

It is important that schedulers take into account other factors besides the architecture connectedness of primary, workers, and resources. Worker access to network and physical resources is restricted in a cloud computing environment. A scheduler on any operating system can perform the same functions as a scheduler in this program. It involves multiple stages to schedule tasks for primary workers. Global schedulers allow workers to be assigned all tasks. On both primary and worker nodes, local schedulers can schedule incoming and outgoing tasks. The organization of dynamic events is crucial to system efficiency. Typically, global schedulers allocate resources and tasks in centralized distributed systems (Houssein et al. 2021; Islam et al. 2021; Mohammad Hasani Zade et al. 2022). Figure 6 shows a comparison of fog, clouds, and grid.

Challenges associated with cloud, fog, and grid computing (Zahoor et al. 2018)

Different types of schedulers are available, including schedulers for resources, workflows, and tasks.

Resource scheduling: Virtual resources are shared among physical machines (PMs) and servers (Yuan et al. 2021). Prioritizing activities is determined by QoS variables. In on-demand scheduling, random tasks are scheduled rapidly with resources. Performance can degrade when multiple processes run on the same machine, and overprovisioning may result from uneven workload allocation. The under-provisioning of VMs can occur if they are held for a long time. As a result of over-provisioning and under-provisioning, service delivery costs increase. The provisioning algorithm must be able to efficiently assess and handle impending workloads. Managing resources in cloud computing is challenging due to heterogeneity, dynamicity, and dispersion (Murad et al. 2022).

Workflow scheduling: To develop an effective and comprehensive workflow management strategy, a variety of computationally complex programs must be used. Workflows are scheduled by executing interconnected tasks in a particular order (Dong et al. 2021). The number of task nodes in one workflow can be very large, especially when heterogeneous workflows are involved, resulting in many changes in parallel computing needs. It is impossible to solve the workflow scheduling problem because it is NP-complete (Javanmardi et al. 2023).

Task Scheduling: By using this method, tasks can be assigned to resources for implementation. Schedule tasks easier than workflows. In centralized task scheduling, one scheduler can schedule multiple tasks across multiple systems simultaneously. When tasks are scheduled centrally, it is hard to scale and tolerate failures. Task schedulers communicate by using a network (Raju and Mothku 2023). In the cloud system, all tasks sent by users are handled collectively by the schedulers. Consequently, several planners handle the workload, resulting in a considerable reduction in the cycle time (Pradeep et al. 2021). Figure 7 illustrates the scheduling of resources, tasks, and workflows.

There are two types of task scheduling processes: static and dynamic. Static scheduling algorithms are used to solve offline scheduling problems in distributed hard real-time systems (Verhoosel et al. 1991). Dynamic schedules are determined during execution based on information currently available, whereas static schedules are fixed before execution. Figure 8 shows the process of static and dynamic task scheduling.

An overview of static and dynamic task scheduling stages (Bansal et al. 2022)

The resource manager manages virtual machines uniformly and determines the speed of computing. Users can submit tasks to the cloud system. A task manager sends tasks to cloud systems, where they are batch processed and the task size is collected. A scheduler begins working once the resource manager and task manager send it information. Figure 9 shows an overview of the scheduling mechanism.

Scheduling mechanism structure (Pang et al. 2019)

Grid scheduling depends on resource allocation and coordination for efficient task execution. Grid services allow applications to create tasks for individuals or Virtual Organizations (VOs). Increasing cloud adoption drives enterprises to adopt cloud computing solutions. Virtualized computing resources can be accessed via cloud computing services without technical knowledge. Table 4 compares grid computing and cloud computing.

Green computing and utility computing have introduced new optimization objectives and variables that could be used in cloud computing infrastructures (Bharany et al. 2022; Kaplan 2005). Several scheduling algorithms were proposed in the early days of grid computing, and many of them were adapted for distributed computing as a result. Various virtualized resources, user demands, workloads in data centers, and changes in resource capacity can all contribute to a cloud having dynamic properties. It becomes increasingly important to schedule tasks as the number of cloud clients grows. It becomes more agile by dynamically allocating and de-allocating a large number of resources. Cloud computing scales up and down as computing resources are added or subtracted. In addition to automatic scaling, dynamic routing, function as a service (FaaS), and resource allocation as a service (RaaS), dynamic cloud services can be used in many ways. Within the past decade, the scheduling literature has grown exponentially as a result of the introduction of new optimization objectives. The field expanded in a way that made assessing new findings difficult. Scheduling tasks in a cloud environment is therefore different from scheduling in a traditional environment.

Fog computing enables cloud computing platforms to handle IoT applications at the edge of networks. The proximity of computing resources to IoT devices makes fog computing a fast way to process IoT requests. The provisioning and management of fog computing resources are among the greatest challenges. Fog computing resources are limited by their capacity limitations, their dynamic nature, heterogeneity, and their distributed nature. It is critical to provide and manage resources efficiently in fog computing in order to implement IoT applications efficiently. Cloud computing provides IoT devices with computing resources that can be accessed quickly and efficiently, allowing for faster processing of IoT requests (Mokni et al. 2023). It is possible to process IoT applications using fog computing, but provisioning and managing fog resources are challenging. It’s difficult to estimate the capacity of fog computing resources since they’re dynamic, heterogeneous, and distributed. It is essential to understand fog computing resource provisioning and management so that IoT applications can take full advantage of fog computing’s capabilities.

2.6 Fuzzy inference system (FIS)

Fuzzy logic (FL) was developed by Lotfi Zadeh (1965). The degree of membership in a universe is usually indicated by a real number between 0 and 1. Fuzzy degrees of truth logic emerge as propositions are assigned truth degrees. It is the real unit interval that gives the typical set of truth-values, corresponding to 0 for “totally false”, 1 for “totally true”, and the other values for partial truth. Fuzzy logic involves modeling logical reasoning by modeling imprecise or vague statements. Logic uses truth values to describe degrees of truth, and this is a category of logic with many values. Fuzzy rules are used to adjust the effects of certain factors in fuzzy control systems. A fuzzy rule-based system typically replaces or substitutes skilled human operators (Mehranzadeh and Hashemi 2013; Nanjappan et al. 2021; Javanmardi et al. 2020; Kumaresan et al. 2023). An inference engine that uses fuzzy rules can be used by a scheduler to make online decisions that maximize system performance based on current network conditions.

FIS is based on a simple concept. These stages include input, processing, and output. Membership functions are created from inputs at the input stage. As a result of the processing stage, each appropriate rule is invoked and a corresponding result is generated. After that, it combines the results. At the end of the process, an output value is produced based on the combined results (Fahmy 2010). A fuzzy system is commonly used in situations where several factors cannot be accurately predicted or known in advance. Fuzzy logic controllers have the advantage of requiring less mathematical modeling than conventional control strategies. Further, fuzzy inferences are suitable for a variety of engineering applications since they map real variables to nonlinear functions. Additionally, fuzzy logic is easy to implement, as it allows for great flexibility in workflow scheduling, for example, where rigor is not required.

A fuzzy logic controller adjusts the parameters of a controlled system based on its current state. There are several steps involved in the design of fuzzy control systems. Inputs and controls need to be identified first. It is necessary to quantify each variable. Afterwards, each quantification is assigned a membership function. Fuzzy rule bases are developed based on input conditions that determine what controls should be implemented. Formulas based on implication are used to evaluate rule bases. Fuzzy outputs can be created by aggregating the results of the rules. The rules of computer computation are much stricter than those of fuzzy theory. The fuzzy theory is useful for a wide range of control problems. It is fundamentally strong since it does not require exact input. In fuzzy theory, knowledge is examined using fuzzy sets. Language expressions such as “Low”, “Medium”, and “High” can be used to show tease sets. Figure 10 illustrates the construction process in four steps.

Fuzzy set theory is useful for describing imprecise or vague data. The fuzzy logic-based approach is beneficial for solving online and real-time problems because it has a low computational complexity. Mamdani systems and Sugeno systems are supported by Fuzzy Logic Toolbox software (https://www.mathworks.com/help/fuzzy/types-of-fuzzy-inference-systems.html). The Mamdani’s method is usually recognized as a method for containing professional knowledge (Singla 2015; Machesa et al. 2023).

The knowledge can be illustrated more human-like to facilitate better understanding. Fuzzy inference of the Mamdani type requires substantial computing power. For dynamic nonlinear systems, the Sugeno method is incredibly efficient for optimization and adaptive control. The Sugeno method of fuzzy inference was introduced by Takagi and Sugeno in 1985. The fuzzy system can be adapted to model the data more effectively with these adaptive techniques. FISs based on Mamdani and Sugeno methods differ primarily in how crisp outcomes are generated. With a mamdani-type FIS, crispy outcomes are calculated by defuzzing fuzzy outcomes, while with a Sugeno-type FIS, crisp outcomes are calculated by weighing fuzzy outcomes (Kaur and Kaur 2012). The Sugeno FIS does not interpret Mamdani results when their outcome is not fuzzy (Haman and Geogranas 2008). Despite the reinstatement of defuzzification, Sugeno’s weighted average improved processing speed. In decision-support applications, Mamdani-type FISs are commonly used because of their interpretability and perception. As opposed to Sugeno-type FISs, Mamdani-type FISs use a different membership function. As Mamdani FISs cannot be integrated with adaptive neurofuzzy inference systems, their design flexibility is limited. Figure 11 illustrates Mamdani and Sugeno models.

In Figs. 12 and 13, fuzzy logic is discussed in terms of its advantages and disadvantages. Figure 14 shows how fuzzy logic can be classified into functional fields. In distributed systems (e.g., cloud computing and fog computing), fuzzy logic is often combined with other models to optimize resource usage, balance load, schedule, etc. Table 5 summarizes various fuzzy logic methods. In distributed computing, fuzzy theory methods can be used in different categories (e.g., task and resource management, trust management, healthcare service, and etc.). This paper focuses on task scheduling, since most studies focus on resource management.

The technology behind resource scheduling is expected to meet users’ demands today. It is common for users to lack the skill to accurately submit their CPU, bandwidth, and storage requirements, but their task attitude can contribute to fuzzy requirements. A cloud computing friendly system aims to meet users’ imprecise needs, and senses these needs in order to satisfy them. Additionally, it is an important standard for quality of service (QoS) in computing environments. Through virtualization, CPU, memory, and GPU resources can be shared by multiple users simultaneously. Resource scheduling is necessary for considering the overall allocation of resources. Resource allocation must also maximize the benefits of all resources. Several characteristics can be used to schedule cloud computing resources so that they meet users’ needs. User resource needs can be predicted due to the long-term nature of cloud computing demand. As a result, fuzzy logic theory can be applied to resource scheduling to solve the extra difficulty caused by imprecise user needs. The combination of fuzzy logic and logical reasoning can make cloud computing systems human-friendly. Dynamic scheduling of resources can be optimized by using feedback control. Dynamically adjusting the configuration can optimize the performance of a resource according to the user’s needs and the resource’s current performance (Chen et al. 2015). In addition, a fuzzy set can also be used to prioritize queued tasks using fuzzy rules (Tsihrintzis and Virvou 2021; Pezeshki and Mazinani 2019; Talpur et al. 2022). Guiffrida and Nagi (1998) summarized four of the most important reasons why fuzzy set theory is relevant to scheduling in Fig. 15.

3 Research methodology

This section provides an overview of fuzzy-based task scheduling methodologies through a Systematic Literature Review (SLR). For gathering task scheduling algorithms, SLR generally includes research guidelines and detailed literature reviews. Figure 16 illustrates several steps involved in research methodologies.

3.1 Data selection source

A thorough search was conducted in three famous scientific databases (i.e., ScienceDirect, Springer Link, and IEEE Xplore) for sufficient and recent information about fuzzy-based scheduling algorithms. Table 6 lists electronic databases as sources of data selection. Different papers between 2005 and 2023 are downloaded in order to conduct this research.

3.2 Study selection procedure

Research on this topic began in March 2022. In every research process, relevant papers must be found. Researchers use keywords related to the research topic to identify and find relevant papers and to filter out those that are unrelated, which greatly influences the content of published papers. A comprehensive search of scientific journals and conferences was conducted to find research papers regarding task scheduling in cloud, fog, and grid environments (see Sect. 3.1). To conduct the search, fuzzy, scheduling, task scheduling, fuzzy logic, cloud, grid, and fog are used. In Fig. 17, we reviewed the selected databases (i.e., IEEE, Springer, and Elsevier) in order to conduct our research. Research papers were collected based on the keywords.

4 Research questions and their motivations

In this study, fuzzy-based scheduling algorithms and techniques are explored in cloud, fog, and grid environments. Table 7 summarizes the different research questions generated by our study. It is imperative that researchers understand the limitations, issues, and challenges associated with existing scheduling algorithms in order to develop effective algorithms.

Researchers will gain a better understanding of fuzzy-based scheduling in distributed systems by answering the above research questions. Table 7 shows an answer to each research question in each section.

5 Review of fuzzy-based scheduling algorithms

This section introduces 35 fuzzy logic-based scheduling algorithms for cloud, fog, and grid systems. This collection includes papers compiled from a variety of peer-reviewed sources. These papers examine fuzzy-based scheduling algorithms for clouds, fogs, and grids between 2005 and 2023. For the last 18 years, we calculated the number of publications per year to visualize research evolution. We divide fuzzy-based scheduling algorithms into three categories: (i) fuzzy-based scheduling algorithms in the cloud environment (ii) fuzzy-based scheduling algorithms in the fog environment and (iii) fuzzy-based scheduling algorithms in the grid environment.

Figure 18 shows that cloud computing was used in 43% of papers, grid computing in 34%, and fog computing in 23%. Cloud computing is a system that communicates via remote servers and hardware. There are two types of processors: servers and local processors. The fog technology allows data to be offloaded to the cloud more efficiently while storing, processing, and analyzing it. Fog computing differs from cloud computing in that it is distributed and decentralized. Fog network provides information as close to the source as possible as a complement to cloud computing. There are many similarities between cloud and fog concepts. Although cloud computing differs from fog computing in some important ways, there are some similarities as well.

Figure 19 shows a visualization of keywords. This chart can be used to learn more about the most important keywords for this topic. Depending on the frequency, each word has a different size.

5.1 Fuzzy-based scheduling algorithms for cloud

Xiaojun et al. (2015) classified cloud computing resources according to Resource Scheduling Based on Fuzzy Clustering Algorithm (CBFCM—Cost-based Fuzzy Clustering Algorithm). This study analyzes four scheduling algorithms, as well as the resulting resource scheduling scheme. A virtual machine's resources are monopolized by each task. It is desirable to schedule job tasks as parallel as possible, in order to avoid task blocking, and to minimize the amount of time it takes to run the job. There are many resources available for computing, including networks, servers, storage, applications, and services. This approach allocates cloud computing resources at the user level using a parallel labor cost performance model, and platform-level resources using fuzzy clustering. The results indicated that the algorithm's number of iterations and classification accuracy are reasonable.

Zhu et al. (2020) proposed Fuzzy Dynamic Event Scheduling (FDES) for scheduling periodic multistage jobs. It uses two metrics (i.e., cost and satisfaction). A high degree of satisfaction is achieved by combining the VM Availability Maintenance (VMAM), Task Collection Strategies (TCS), task deadline assignment (TDA), and Fuzzy Task Scheduling (FTS). Virtual machines in virtual clusters can be maintained through time slot maintenance. To determine which tasks should be scheduled, two TCS proposals have been proposed: (1) The TCS1 proposes only to schedule tasks that have arrived since the last scheduling period. (2) TCS2 replaces unfinished tasks from the previous time window with just arrived ones. It performs two main operations: task prioritization and task allocation. The priority of ready tasks is assigned during task prioritization, and the fuzzy priority is assigned during task allocation. A fuzzy minimum heap will be used to prioritize ready tasks. Triangular fuzzy numbers represent fuzzy temporal parameters. In addition to being completely reactive, dynamic scheduling considers three aspects: (1) jobs arrive at unpredictability; (2) data transmission times are uncertain between any two consecutive tasks until completion of data transmission; and (3) the processing time for a task is uncertain until it has been completed. A comparison of FDES to DES and Heterogeneous Earliest Finish Time (HEFT) algorithms indicates that it performs more efficiently and robustly than other algorithms.

Using fuzzy quotient space theory, Qi and Li (2012) presented a cloud job scheduling algorithm. Resources can be scheduled by abstracting computing attributes into virtual machines. To assign weights to virtual machine attributes, minimum variance mean theory is used. Consequently, multi-attribute information is granulated based on user QoS requirements. Three indices are used to describe the problems (X, f, T), where X denotes the conceptual set, f (.) denotes the attribute of X, and the structure of X and the relationships between its elements are represented by T. The first step is to coarsen the granulation space, construct candidate collections, and meet task execution requirements. QoS factors are used to classify tasks and fuzzy quotients are used to define distance functions. Experiments indicated that the algorithm performs user tasks efficiently and effectively.

According to Farid et al. (2020), FR-MOS is a new method for scheduling scientific workflows that combines Fuzzy Resource Utilization and Multi-Objective Scheduling (MSO). The provider offers several machines to users. PSO schedules a real scientific workflow in a multi-cloud environment. It is important to minimize costs and time as much as possible. Four QoS requirements are considered in the FR-MOS algorithm, including the makespan, the cost, the reliability, and the resource utilization. Users need to create an application structure before starting a workflow application. Cloud platforms should support VM types compatible with workflows. Cloud providers assign tasks to task queues, and tasks are handled based on workflow structures. The cloud provides a limitless number of virtual resources, but task sequencing must depend on each other since tasks can be queued in parallel. In the FR-MOS algorithm, the reliability coefficient ρ is determined by fuzzy logic.

Guo (2021) proposed a fuzzy self-defense algorithm based on multi-objective task scheduling. Furthermore, the load balancing algorithm considers the customer's waiting time and task costs. It is necessary to schedule multi-objective tasks in cloud computing in order to minimize waiting times for customers. Furthermore, multi-objective task scheduling considers the cost of multi-objective task completion as well as resource load balance. By using this method, schedule completion times can be reduced, improving schedule efficiency. According to the estimates, 95% of the resources were utilized, which is a reasonable rate.

Mansouri et al. (2019) proposed a hybrid task scheduling algorithm named Fuzzy Modified Particle Swarm Optimization (FMPSO) that is based on the fuzzy system. FMPSO incorporates speed updates, crossovers, and mutations along with roulette wheels. The SJFP method starts with the construction of a population, followed by the calculation of velocity matrices, position matrices, crossover operators, and mutation operators. Scheduling strategies are based on three common assumptions. In the first place, all tasks are independent. A user submits n tasks for execution across m virtual machines to minimize execution time and to optimize resource management. Virtual machines are scheduled in first-come-first-served order if there are more tasks than virtual machines. Virtual machines are assigned tasks according to the proposed scheduling strategy (FMPSO). Transferring tasks between virtual machines is not possible. It is not allowed to assign one task to multiple virtual machines at the same time. Fuzzy inference considers inputs such as task lengths, CPU speeds, RAM sizes, and execution times when calculating fitness. POS is further optimized through crossovers and mutations. In the experiment, FMPSO used resources more efficiently.

Zhou et al. (2018) proposed a method for workflow scheduling in Infrastructure as a Service (IaaS) called Fuzzy Dominance Sort based Heterogeneous Earliest-Finish-Time (FDHEFT). An algorithm based on HEFT (Heterogeneous Earliest-Finish-Time) scheduling lists and fuzzy storing is proposed. It begins with an overview of HEFT and HEFT-based algorithms, followed by a description of fuzzy dominance sort, and ends with a discussion of FDHEFT. The HEFT method uses a list-based heuristic to prioritize tasks and select instances. Priority-based selection is performed by HEFT according to the priorities assigned to each task. Each selected task is assigned to its best instance based on its priority and minimizes completion time by selecting tasks in order of priority. Multi-objective performance is measured using fuzzy dominance sorts. For multi-objective problems, the FDHEFT allows them to explore high quality tradeoff solutions and achieve fast convergence. The task prioritization process consists of two phases: task selection and instance selection. The higher rank tasks in a workflow are prioritized based on FDHEFT's upward sorting by non-increasing rank values. By using fuzzy dominance values, FDHEFT sorts and selects the best K schedule solutions in the instance selection phase. Workflows in IaaS clouds should be more cost-effective and faster. The resource parameters and pricing of Amazon EC2 facilitated FDHEFT's cost effectiveness and time reduction.

Samriya and Kumar (2020) presented an optimal Service Level Agreement (SLA) based task scheduling by hybrid fuzzy TOPSIS-PSO (Particle Swarm Optimization). PSO-based algorithms optimize available tasks and VMs. In cloud computing, task scheduling is a challenging issue due to the large number of users. This paper presents an algorithm for scheduling tasks to solve these problems. Fuzzy TOPSIS and PSO algorithms are used in this study in order to plan productively the task in cloud. This study seeks to reduce energy consumption and migration costs. A multi-objective function is achieved by hybridizing PSO with Fuzzy TOPSIS. PSO optimizes the number of virtual machines and the available tasks at the beginning. As an objective function, Fuzzy TOPSIS uses the weighted sum of energy, cost and execution time to solve the multi-objective task scheduling problem. With the Fuzzy-TOPSIS algorithm, the task scheduling problem is solved for cloud-based SLA-based tasks by estimating the cost, energy weight, and execution time of each task. Experimental results showed that the proposed algorithm meets the SLAs, facilities, and QoS requirements.

Wang et al. (2022) proposed that a task scheduling framework based on locality, deadlines, and resource utilization is an energy-efficient one. Tasks are scheduled to rack- and cluster-local servers, as well as remote servers, in order to achieve high levels of data locality. A cluster slot is updated once tasks and slots are assigned. A fuzzy logic algorithm optimizes server CPU, memory, and bandwidth utilization. Fuzzy logic dynamically configures CPU, memory, and bandwidth on each server. The Jiangsu Institute of Public Security Science and Technology provides the data necessary to construct three membership functions. It is possible to derive fuzzy rules using practical operators. The calculation of dynamic server slots is possible using fuzzy logic constructs based on practical data. Each job consists of several independent tasks and is completed at time 0. Servers are shared among clusters for MapReduce jobs. Each job has an assigned number of servers. Job processing requires the use of physical servers. Using MapReduce clusters with variable number of slots, energy consumption was reduced while staying within deadline constraints (U - T1). Based on experiments, the proposed task scheduling significantly reduced energy consumption.

Shojafar et al. (2014) presented a hybrid approach that is based on fuzzy theory and a Genetic Algorithm (GA), which is called FUGE. FUGE algorithm assigns tasks based on the performance of virtual machines and memory. FUGE enhances GA using fuzzy theory. Two types of chromosomes are proportional evaluated using QoS parameters. First-generation output chromosomes undergo a modified fuzzy operation before being added to the population. One of the best chromosomes from the first generation is called the output chromosome. These criteria serve as input parameters for fuzzy systems. There is no limit on the number of chromosomes each type should have, but they should have the same number for both types of chromosomes. Each chromosome of each type is then allocated computational resources (standard random) in order to calculate the fitness value. For every gene (job), fitness function values are added to calculate chromosome fitness functions. The fitness value of each chromosome determines which is selected. Summing the fitness function values of chromosomes' genes yields the fitness function values for those chromosomes. The algorithm generates a new chromosome based on the overlapped chromosomes (of different types) and performs the crossover operation on those selected chromosomes. The best chromosome of the first generation (i.e., the best chromosome of the first generation) is created after completing this step. The first generation is regenerated after adding this chromosome back. Recursively repeating the entire process for the new population is referred to as the recursive algorithm. Simulations by FUGE showed it was possible to achieve an effective load balance at an affordable cost.

By applying the fuzzy dominance mechanism, Rani and Garg (2021) improved the application's reliability while reducing energy consumption, using the Reliable Green Workflow Scheduling (FDRGS) algorithm. Fuzzy dominance sorting machines determine the optimal trade-off solution based on the fuzzy dominance values. There are two phases to the algorithm. The first step in creating a workflow application is assigning a rank to each task. Consequently, the tasks ranked highest within the workflow application are given priority. Secondly, each task is assigned to the appropriate processor. They sort the trade-off solutions using fuzzy dominance. It determines the best compromise by comparing trade-off solutions. In addition to optimizing energy consumption, dynamic voltage and frequency scaling (DVFS) is used to select a specific processor frequency level to ensure task reliability. A further benefit of the DVFS approach was that it could save energy. An analysis of the performance of FDRGS is conducted compared to the performance of ECS (Energy-Conscious Scheduling), HEFT, and RHEFT (Reliable-HEFT) algorithms. In terms of energy usage and reliability, the proposed algorithm performed better.

Gholami Shooli and Javidi (2020) proposed a Gravitational Search Algorithm (GSA) with a fuzzy system for resource allocation and task scheduling. It is also possible to solve nonlinear problems using GSA. The four main forces of nature can be divided into four categories. In addition to gravity, weak forces and electromagnetic forces also exist. In the universe, three forces act, but gravity is the strongest. GSAs optimize discrete time artificial systems using gravitational laws and motions. The first step involves determining the system space. In the problem space, there is a multidimensional coordinate system. The problem can be solved by any point in space. Masses make up the search agents. Generally, a mass can be described in terms of its position, gravity mass, inertia mass, and inertia mass. There are several factors that determine a mass's gravitational and inertial masses, including its fitness. The rules of the system are established after the system is formed. In this assumption, only gravitational laws and motion laws will be established. To achieve the solution, a random position is assigned to each mass in the algorithm. Every time a mass is evaluated, its position is determined. Furthermore, the mass of gravity, the mass of inertia, and the Newton gravity constant are updated continuously. Repeatability is a good indicator of the stop condition. When GSA calculates the number of masses, it uses a fuzzy system. It minimizes makespan, flow time, and load imbalance during task assignment. In experiments, the approach algorithm seems to efficiently allocate resources, balance loads, and save time.

In Soma et al. (2022), Soma et al. proposed a Pareto-based Non-dominating Sorting PSO (NSPSO) method for optimizing multi-objective problems. Users can select a dynamic configuration based on their needs. It is possible to make sense of uncertain situations using fuzzy logic. Statistically, non-dominated solutions are likely to be more prevalent in large-scale scientific applications; memory usage is another objective when determining the quality of a solution. Moreover, fuzzy rules simplify the process of classifying solutions, allowing users to make quicker decisions. It is possible to select the best resource pair and task pair based on user preferences with the NSPSO fitness function when fuzzy based decision selection is incorporated. This system aims to accomplish the following: (1) Optimizing workflow tasks with a multi-objective approach to minimize energy and time consumption. (2) The above problem can be solved by using NSPSO meta-heuristics based on Pareto and Fuzzy Rules (F-NSPSO). (3) Compared to Simple DVFS, NSPSO, and other algorithms, the F-NSPSO algorithm performs better. F-NSPSO performed real-world scientific applications for Epigenomics, Cybershake, and Montage using varying task sizes. In comparison with DVFS, F-NSPSO performs better.

One of the major challenges in the Internet of Things (IoT)-Cloud scenario is uploading and replicating data across multiple cloud data centers. The number and location of replicas can be determined adaptively, avoiding this problem. Despite the fact that data replication ensures reliability and availability, many copies of each data will require more storage space. This problem can be overcome by replicating the files a minimal number of times. Saranya and Ramesh (2023) studied IoT-Cloud data replication and scheduling using a hybrid fuzzy-CSO algorithm. It finds the best replication locations using Cat Swarm Optimization (CSO). The authors presented a data collection network based on Fuzzy-CSO algorithm's common framework for IoT-Cloud. The fuzzy-CSO algorithm optimizes replication locations. Data replication is scheduled at selected replication points by a task scheduler. The cloud is used for uploading uncompromised data once it has been isolated. Data can be requested using the request-response method. Reports can be generated using the collected data that day or average reports can be requested based on collected data. Fuzzy logic models consider fault occurrence probability, energy costs, and storage capacity when determining how many replicas to use. Fuzzy logic decision returns the optimal number of replicas. It transfers data faster, responds faster, and maximizes bandwidth utilization compared to existing algorithms.

Since workflow scheduling often occurs in uncertain environments, most studies avoid rigorous conditions without fluctuations. A workflow fuzzy scheduling algorithm (WFSA) is presented by Ye et al. (2022). By using the fuzzy sorting strategy, WFSA ranked tasks according to their processing time using triangular fuzzy numbers (TFNs). The fuzzy deadline assignment method is developed using partial critical paths (PCPs) of workflows that are used to decompose workflow deadlines into task sub-deadlines. It presents a workflow scheduling strategy based on heuristics that is cost-driven and meets task deadlines. In comparison with other benchmark solutions, the algorithm reduces workflow execution costs effectively.

Table 8 summarizes the cloud environment proposals and analyzes them. Table 9 summarizes cloud scheduling algorithms. Table 10 compares cloud scheduling algorithms based on QoS parameters.

5.2 Fuzzy-based scheduling algorithms for fog

Ali et al. (2021) presented a task scheduling algorithm for fog-cloud computing that uses fuzzy logic. Assigning tasks to appropriate processing modules creates a haze layer that operates on heterogeneous resources. Additionally, other factors (such as time and size of data) are considered. In the haze layer, custom real-time tasks can be executed to improve IoT application performance. To determine the best place for processing a task, the fuzzy decision algorithm considers resource requirements, time constraints, maximum fog layer resources, cloud-to-fog latency, and maximum fog layer resources. A task cannot be carried out on the fog layer, so it is passed to the cloud. Resources are normalized instead of being calculated and calculated. A Fuzzy Logic Based Decision Algorithm determines whether to process a task in the cloud or in the fog based on the task's resource utilization, deadline, and network latency. When a task is assigned to a fog layer, it is added to the fog queue FQ. The fog queue is ordered according to the deadlines of the tasks. Thus, more tasks will be completed within deadlines by completing urgent tasks with hard deadlines first. The proposed algorithm is faster in construction, has a lower latency rate, and has a shorter rotation time than other algorithms.

Benblidia et al. (2019) proposed a ranking-based task scheduling system based on user preferences and fog node characteristics. Nodes are ranked according to their satisfaction with fuzzy quantified propositions. A major contribution to the paper is the application of fuzzy logic to task scheduling, and the refinement of scoring with two metrics Least Satisfactory Proportion (LSP) and Greatest Satisfactory Proportion (GSP). In the fog network, low-power terminal devices handle requests from high-performance users. A fog node receives requests through a wireless network (e.g., LoRa, WiFi, or Bluetooth). Fog nodes distribute requests via task schedulers. In addition to analyzing data summaries, the cloud also sends new application rules to fog nodes, which implement the rules. There are five criteria for serving users: distance, price, latency, bandwidth, and reliability. Fuzzy membership functions are generated based on users' preferences. It is based on the user's preferences and the fog node's restrictions on sending requests to the user that a fog node is selected. In the experiments, the proposed algorithm achieved satisfactory levels of user satisfaction while balancing implementation latency and power consumption.

By combining fuzzy-constraints with multi-agent systems in the fog-cloud environment, Marwa et al. (2023) have proposed a fuzzy-cone approach called (Fuzzy-Cone). Multi-agent systems use fuzzy inference to model all possible scenarios, which facilitates decision-making. The workflow scheduling problem is modeled using a Fuzzy Constraint Satisfaction Problem (FCSP). In a win–win situation, the client agent represents the client, while the supplier agent represents the supplier. Each agent models a set of fuzzy constraints based on its negotiations by making offers or counter-offers. It represents the imprecise preferences of the approach entities by using predefined fuzzy constraints that ensure compliance with all restrictions. Compilation time and cost of the workflow scheduling solution are optimized. Scheduling workflows is optimized by reducing compilation time and increasing costs.

Vemireddy and Rout (2021) introduced the Fuzzy Reinforcement Learning algorithm (FRL). Whenever task deadlines and resource availability limit mobile fog nodes, they offload tasks using fuzzy reinforcement learning. Using Vehicular Fog Computing (VFC) frameworks and Fuzzy Reinforcement Learning (FRL), an energy-efficient task allocation is achieved for fog-breaker vehicles. It is possible to accelerate algorithm learning through fuzzy logic. Vehicle weights are calculated by fuzzy systems based on factors such as processing rate and distance. Each system state is associated with a set of RL actions. Greedy analysis is used until the agent determines the most advantageous actions. A fog vehicle represents a state while a time slot represents an action. Service time and energy are also taken into account when calculating the reward for each RSU. Vehicles cannot be directly identified by the RL agent in a dynamic situation. As a result of its training, it reacts to the weights of vehicles as actions. Reinforcement learning can be used by policy designers to determine which fog vehicle set is most appropriate for their policy. It is important to maintain a balance between the RSU's service time and energy consumption in order to maximize long-term rewards. Based on a comparison with other algorithms, the FRL algorithm uses 46.73 percent less energy and responds 15.38 percent faster.

In fog computing, Wu et al. (2021) proposed an evolutionary fuzzy scheduler to allocate resources to multi-objective applications. With the help of Estimation of Distribution Algorithm (EDA) applications, the fuzzy offloading strategy is determined and optimized. A probability model, P, is developed first, and then individuals are sampled using P. Results are evaluated next. Whenever elite individuals are identified, P is updated accordingly. The updated P value is then used to sample new individuals until the stopping criteria are met. By clustering applications, system resources are efficiently utilized. A number of contributions have been made, including (1) fuzzy logic-based methods of assigning tasks to different layers (2) fuzzy number approaches to modeling and data transfer (3) and performance improvement in fuzzy logic-based partitioning with local operators. They used the nearness degree to compare two fuzzy sets. The proposed algorithm delivers robust performance for Pareto sets according to simulation studies.

Shukla et al. (2020) proposed a method for scheduling multi-objective tasks that is energy-efficient while using interval type-2 fuzzy timing constraints. Embedded Real-Time Systems (RTES) must determine the speed at which tasks are completed in order to be efficient. Energy-efficient scheduling is necessary in these systems. Input parameters are either data- or expert-driven. The proposed method proposes two fuzzy type-2 fuzzy membership function algorithms and two fuzzy type-2 fuzzy scheduling limitation algorithms. Two conflicting objectives must be resolved when scheduling embedded real-time systems: energy efficiency and timeliness. It is possible to model uncertain timing constraints in RTESs by using IT2 FID (interval type-2 fuzzy input data). Energy consumption should be minimized in order to achieve the first objective. It is essential to schedule all tasks in advance in order to accomplish the second objective. Cost functions are used to model each objective. Fuzzy type-2 uncertainty improved efficiency and robustness in RTES.

The Hosseini et al. (2022) algorithm used Priority Queues, Fuzzy Analysis, and Hierarchical Processes in determining routes and scheduling. The first step in weighting criteria is to determine their relationship. Each criteria set is weighted in order to produce a priority list. A top-down priority queue is used to prioritize tasks. This queue should be simulated as a maximum heap queue. Prioritizing tasks assigns resources according to priority. The criteria will also be used to prioritize new tasks. Once the tasks have been assigned, they are executed. It is first determined how long a task will take and how much energy it will consume. Experimental results indicated that the proposed algorithm optimizes service level, waiting time, and number of scheduled tasks on the MFC side.

Rai et al. (2021) described a three-tiered architecture for vehicular fog computing that uses a Road Side Unit (RSU) to coordinate mobile devices and fog vehicles. A chain of vehicles is created and updated periodically as part of the schedule constraint. A fuzzy logic-based task allocation strategy is developed to maximize community value. Experimental results indicated that the proposed algorithm outperforms other existing approaches.

According to Table 11, we can find a summary of the proposals in the fog environment and an analysis of them. Table 12 summarizes fog scheduling algorithms. Table 13 compares fog scheduling algorithms based on QoS parameters.

5.3 Fuzzy-based scheduling algorithms for grid

Zhou et al. (2009) studied Ant Colony Optimization (ACO) and Fuzzy Reputation Aggregation (FR) algorithms to improve scheduling decisions. The proposed algorithm integrates node properties and reputation values, and fuzzifies these properties using fuzzy logic. It is possible to calculate local trust scores and global reputation using fuzzy logic inference rules. Along with three fuzzy variables assigned coarsely to attributes (good, ordinary, poor), five output variables are assigned coarsely to attributes (very good, good, ordinary, poor, very poor). This algorithm is faster when historical and node information is taken into account.

Moura et al. (2018) proposed Int-fGrid to address uncertainty in network environments using Fuzzy Type-2 logic. BoT (Bag-of-Tasks) is assigned to machines according to their execution time. Int-fGrid receives both a BoT and a Current State of Grid Resources as inputs. Int-fGrid determines which machines are available for use based on their current states. Each machine in the system is measured for Communication Costs (CC) and Computation Power (CP), and the data is then adapted to a Standard Scale. The next step is to move on to the Type 2 Fuzzy Module, which includes fuzzy logic fuzzing, inference, and defuzzing. Priority lists will be created based on the analysis of the available machines' priorities. It also verifies that some machines still remain unanalyzed. Priority lists for all the available machines are available, so all the machines must be analyzed before a priority list can be generated. Scheduler Decision Maker maps tasks according to the available machines based on the priorities list. Machines with higher priority receive tasks more efficiently. Tasks are assigned to machines based on their priority. SimGrid simulations showed that the proposed algorithm controlled the extra traffic and intrusions created in the network.

Vahdat-Nejad et al. (2007) presented several fuzzy algorithms to schedule global tasks in multi-clusters and grids. Fuzzy logic matches available resources with job specifications based on job specifications. There are two layers of scheduling: global and local. A global scheduler assigns tasks to clusters depending on their computation and communication requirements. Tasks are evaluated using a two-degree matching system. The suitability of a task depends on the number and level of low-load nodes in a cluster and the bandwidth available to the job. Cluster nodes receive tasks from local schedulers. Schedulers assign jobs to clusters when they arrive. Each cluster is assigned two weights (between zero and one). According to cluster weight 1, jobs require low-load CPUs and a large number of tasks. In addition, cluster weight 2 takes into account job and cluster communication requirements. The scheduler assigns the job to the cluster with the highest priority. Simulation results showed that a low completion time was achieved thanks to the proposed algorithm that considers network traffic during scheduling.

In grid computing, Rong and Zhigang (2005) made use of the Fuzzy Applicability Algorithm (FAA) to schedule meta-tasks. The problem was divided into a number of smaller tasks without communication between them. It is necessary to determine the attributes of resources before scheduling them. A site's communication and storage resources must be adequate before tasks can be executed. To select the maximum number within this set, the FAA selects the most significant number. Therefore, the biggest task determines the tasks and resources. Each time a resource is changed by the scheduler, the solution is output. Task scheduling continues until no more tasks need to be scheduled. In addition to the estimated cost, the practical cost and the estimated execution time are taken into account. Each task is optimized by maximizing the weighted average fuzzy applicability. Even though FAA is the cheapest over the long run, it is more expensive than Max–Min (Mala et al. 2021) and Fast Greedy (Wang et al. 2018).

Salimi et al. (2012) proposed a method to schedule independent tasks in a market-based grid with load balancing based on NSGA II and fuzzy mutations. Parallel distributed computer systems can solve independent task problems using NSGA II and fuzzy adaptive jump operators. Fuzzy mutation prevents mutations from disrupting solutions. The Pareto process can be accelerated and simplified since various reduce mutation rates and prevent disruptions in the solution process. It is important to reduce the time needed to complete a job, load machines, and keep prices low. NSGA II with Fuzzy Adaptive Mutation Operator can be used to solve problem relating to independent task assignment in distributed computing systems. When scheduling tasks in a grid, there are three approaches. This problem is investigated using NSGA II, followed by MOPSO. Then, both NSGA II and fuzzy logic were used to assign two objective functions, Price and Makespan. The optimization of task scheduling is based on three objective function optimization strategies: Price, Makespan, and Load Balancing. Variance between Costs and Gene Variance are inputs for NSGA II with fuzzy mutations, which express differences between bits' values, so that individuals are not equal. It is possible to generate Pareto optimal solutions more quickly and precisely by using this algorithm.

García-Galán et al. (2012) implemented a fuzzy scheduling algorithm based on swarm intelligence. In Fuzzy Rule-Based Systems (FRBSs), knowledge is driven by fuzzy rules and is highly adaptable to changes in the environment. This system illustrates how variables interact within it using Fuzzy-Logic. Swarm Intelligence can also acquire knowledge using FRBS-based scheduling. Variables are used to describe both the current state of machines and the results obtained so far during scheduling. Performance evolution over time is dependent on their actual resource conditions in each domain as well as their actual performance conditions. Scheduling based on fuzzy rules is easier when PSO is adapted to them. Simulated results showed that a fuzzy-meta scheduler outperformed six classical queue-based and task scheduling methods used today. Moreover, 62.6% of fitness training results are generated by the fuzzy scheduler.

Prado et al. (2012) proposed multi-objective knowledge acquisition for the provision of QoS at the grid scheduling level. The Knowledge Acquisition with a Swarm Intelligence Approach (KASIA) implements a new learning strategy using swarm intelligence rules. The performance of an efficient meta-scheduler network is evaluated using well-known GAs and Pareto optimization theory. Because FRBS incorporate both network expert knowledge and grid state uncertainty, they provide efficient solutions. Fuzzy systems consider factors such as tardiness, deadline evaluation, delayed jobs, and lead times when making decisions. As a first step, an objective function or fitness must be improved. The search space is initialized with NP particles distributed randomly. A particle's position is updated through iterations in order to improve objective function f. Particles also lean to maintain momentum in order to achieve optimal results. Additionally, the social component refers to the movement of the particle towards its best position. At the end, the location of a particle changes. Multi-objective fuzzy rule-based scheduling improves network resource optimization.

Salimi et al. (2014) used a fuzzy operator in a network environment in order to improve the performance and quality of the non-dominated sorting genetic algorithm (NSGA-II). Scheduling jobs in a grid environment takes into account three objectives: load balancing, makespan, and price. NSGA-II calculates fuzzy crossover and mutation functions using variance between individuals' costs, variance between resources' frequencies, and variance between genes' values. Searching for Pareto-optimal solutions is simplified with fuzzy operators, which reduce computation and simplify decision-making. The fuzzy method can be used to improve the Pareto front by utilizing adaptive mutation rates along it. Therefore, a fuzzy system is fed both fitness variations of individuals and resource frequency variations. Load balancing is indirectly improved through an additional input combined with a makespan objective. Fuzzy systems determine crossover rates in populations. The proposed approach balances the workload with less repetition to achieve the best results.

Liu et al. (2010) developed an algorithm for scheduling computational grid jobs. Fuzzy matrices represent particle positions and velocity in PSO. A new job is assigned to a machine based on the Longest Job on the Fastest Node (LJFN) method and the Shortest Job on the Fastest Node (SJFN). Scheduling array elements are tagged as “1” in the position matrix, and the remaining numbers are tagged as “0”. It is necessary to process both the scheduling array and the makespan (solution) in order to calculate the scheduling solution. The PSO algorithm is compared with simulation annealing (SA) and GA. Results showed a faster convergence rate and an efficient scheduling process.

In a virtual organization, Prado et al. (2010) presented an application-level genetic fuzzy rule-based scheduling system for scheduling computationally intensive BoT applications. Tasks are arranged and scheduled in a fuzzy manner. AI-based scheduling systems involve several systems: tasks gathering, tasks sorting, and AI-based scheduling. It is initially necessary to identify the points in time, referred to as mapping events, when tasks are incorporated into the objective set of tasks q(t) based on their arrival at the meta-scheduler. A time interval \(\Delta t\) is specified after each mapping event at t. Thus, both tasks that were not scheduled in q′(t) as well as those that arrived after the batch-mode strategy was applied will be included in waiting queue q(t). Moreover, the task sorting system reorders tasks before resource domain (RD) allocation, forming the queue q″(t) properly. During task assignment, the scheduler uses a fuzzy system that takes several factors into account (e.g., amount of memory, amount of CPU time, number of idle MIPS, number of free processors, free memory, size of executed tasks, and number of executed tasks). This strategy improves response times by nearly 11% over other well-established scheduling methods. In addition, it improves response times and balances load while effectively managing users' priorities.

Saleh (2012) proposed an efficient grid scheduling strategy using a fuzzy matchmaking approach. A key focus of researchers is to minimize turnaround times and solve scheduling haste. The first step is to model ready-to-run tasks. Moreover, a fuzzy-based matchmaking method is used for assigning tasks based on CPU power, memory requirements, and storage requirements. In this proposal, grid-scheduling is divided into three tiers, namely: Grid Clients Tier (GCT), Grid Scheduler Tier (GST), and Grid Workers Tier (GWT). GCT represents grid users through their client machines, which they can use to accomplish their tasks. It provides an infrastructure to simulate a virtual supercomputer with GWT. The system aggregates the computational power of geographically dispersed workers. GST is an important component of the proposed scheduling frameworks. Scheduling decisions are made by GST. GST also collects task results and sends them to the task creator. Simulations revealed that the proposed scheduling strategy resulted in a reduction in failure rates and makespan.

Rajan (2020) proposed the FPDSA algorithm for scheduling jobs in grid computing. Using fuzzy time delays, tasks are assigned based on processing speed. For this reason, they estimated the expected execution time using a fuzzy number. By subtracting the deadline from the fuzzy expected computation time, a reverse allocation produces a difference. The resource inventory of a task is determined by its requested properties and the number of processors it requires. The appropriate task should be selected based on the availability and processing speed of scheduled tasks. The dispensation velocity of this system is maximum, so it becomes a priority as soon as it is implemented. It was compared with the Improvised Deadlines Scheduling Algorithm (IDSA), Earliest Deadlines First (EDF), and Prioritized Based Deadlines Scheduling Algorithm (PBDA) according to the average actual execution rate (AAE), non-delayed jobs, and delayed jobs. FPDSA was found to be effective in reducing the number of delayed jobs.

According to Table 14, we can find a summary of the proposals in the grid environment and an analysis of them. Table 15 summarizes grid scheduling algorithms. Table 16 compares grid scheduling algorithms based on QoS parameters.

6 Analysis and comparisons

6.1 Taxonomy based on publishers

The selected articles come from ScienceDirect, IEEE, and Springer publishers. Figure 20 compares the classification of papers by three publishers. As shown in Fig. 20, ScienceDirect accounts for 34% of all papers, IEEE for 40%, and Springer Link for 26%.

6.2 Taxonomy based on the year of publication

Figure 21 shows the papers categorized by publication year. Among the articles published from 2005 to 2023, most appeared in 2012, 2020, 2021, and 2022.

The majority of articles in the grid environment were published in 2012 (see Fig. 22). Cloud and fog papers generally focus on the years 2019–2023. Cloud-based publications dominate in 2020, whereas fog-based publications dominate in 2021.

6.3 Comparison based on the type of scheduling method

Figure 23 illustrates the static and dynamic modes of algorithms. Most presented algorithms (about 91%) use dynamic methods, while only 9% use static methods. Table 17 shows that dynamic algorithms are used more often than static algorithms.

6.4 Comparison based on evaluation environment

Figure 24 shows that a majority of articles used simulation tools to evaluate their algorithms, whereas only one used real-world environments. There have been no papers that use both simulation tools and real-world environments together. Furthermore, two articles did not specify whether a real environment or a simulation tool was used. There are many simulation tools that are used in Cloud, Fog, and Grid computing in order to evaluate new scheduling algorithms. CloudSim (Hicham and Chaker 2016) is a popular simulation tool. Simulators like GridSim (Buyya and Murshed 2003) and iFogSim (Gupta et al. 2017) are also available. Figure 25 indicates the distribution of algorithms using a simulation framework. CloudSim simulator is used by 22% of algorithms, Matlab and GridSim simulators by 16% and 14%.

Cloud computing involves simulating the infrastructure and services provided by the cloud using CloudSim. It is entirely programmable in Java and developed by CLOUDS Lab. Modeling and simulating cloud computing environments is used before software development to replicate tests and results. Figure 26 shows that the CloudSim offers several benefits.

CloudSim can perform a variety of simulations and models, as shown in Fig. 27.

Engineers and scientists can analyze and design systems and products with Matlab. Simulink and Model-Based Design facilitate the deployment and analysis of enterprise applications. GridSim simplifies and automates modeling and evaluating scheduling algorithms. Parallel and Distributed Computing (PDC) simulates interactions between users, applications, resources, and resource brokers. Resource brokers enable the aggregation of heterogeneous resources. The management of these resources is done by different types of shared schedulers, including shared memory, multiprocessors, and distributed memory schedulers. The heterogeneity of a resource depends on its configuration, availability, and processing capabilities. Scheduling algorithms or policies assign tasks to resources based on system or user objectives.

Figure 28 illustrates which simulators were more and less used in each environment by articles. According to this study, GridSim simulation tools are used more commonly in grid computing environments than other tools. CloudSim is the most common tool for simulating cloud environments. Simulations in fog environments are usually performed using Matlab.

6.5 Classification based on various meta-heuristic algorithms

Figure 29 shows that most algorithms use meta-heuristics along with fuzzy systems when solving scheduling problems. Some articles used traditional algorithms, while others used computational methods. Heuristic algorithms are used by 23% of fuzzy-based scheduling algorithms, while meta-heuristic algorithms are used by 60%. Heuristics are used based on the problem at hand. It is possible to maximize their specific benefits since they are typically customized.

In contrast, a meta-heuristic is an independent technique. In order to explore the solution space more thoroughly, they may temporarily depreciate the solution. Although meta-heuristics are problem-independent, they have to be fine-tuned to fit the particular problem. Around 16% of the algorithms discussed in this paper use metaheuristic algorithms. Figure 30 illustrates the percentages of different meta-heuristic algorithms. About 34% of algorithms use the well-known meta-heuristic algorithm PSO, and about 34% use GA.

6.6 Analysis of QoS parameters

Figures 31, 32 and 33 illustrate the percentage of different QoS parameters considered by different scheduling algorithms in cloud, fog, and grid environments. Most articles consider not only the makespan time, but also the response time and execution time. Furthermore, most articles ignore important factors like security, resource allocation, and rotation time. Makespan time is considered in clouds around 46%, in fog around 25%, and in grid around 50%.

The makespan time and cost in a cloud environment (see Fig. 31) are primarily considered, while rotation time and latency rate are not. Based on Fig. 32, fog environment algorithms are designed to minimize execution time, task migration, response time, and energy consumption. Figure 33 shows that grid environment articles placed more emphasis on makespan time than other factors, but not on resource allocation.

Nowadays, distributed systems need to be secured. Information must remain private regardless of whether it is stored or sent over a network. Many of the advantages of cloud/fog computing may be incompatible with traditional security models and controls. Distributed environments can present security challenges, especially for businesses that own their own infrastructure and computational resources and sell services to consumers. It is crucial to ensure information confidentiality, integrity, and authenticity when using real-time IoT applications (such as smart healthcare and smart homes). Security service in distributed environment is extremely complex compared with that in a traditional data center (Pourzandi et al. 2005). An attack surface with many compute resources in a cloud can be large. It is important to incorporate security modeling into task scheduling, performance, and trust QoS requirements to improve cloud service quality. Fuzzy logic is a straightforward method for modelling such parameters. In real-time system scheduling research, scheduling constraints are typically assumed. Generally, these parameters suggest using fuzzy logic to determine how to order the requests in such situations so that the system can be used more efficiently and to minimize the chances of requests getting missed or delayed. As a result, scheduling parameters will be considered fuzzy variables. Swamy and Mandapati (2018a, b) defined input variables for fuzzy systems as job length, VM memory, security level, and energy consumption. With this algorithm, customers will be able to receive their orders on time. Ali and Sridevi (2023) proposed a novel task scheduling algorithm that utilizes fuzzy logic to optimize the distribution of tasks between the fog and cloud layers. The algorithm selects the right processing unit based on the requirements of the task (e.g., computation, storage, bandwidth, security). Sujana et al. (2018) presented a fuzzy-based decision model. As a result, the most secure VM can be chosen for the workflow tasks. Integrity, confidentiality, and authentication are taken into account when ensuring security.

6.7 Comparison based on the fuzzy logic usage

Table 18 presents the use of fuzzy logic in the reviewed papers. There are many practical and commercial problems that can be solved using fuzzy logic systems. Furthermore, it can be applied to product and control machines for consumers, to managing engineering uncertainty, and to providing acceptable but not exact reasoning. Further, fuzzy logic is advantageous for a various of problems because of its unique features (see Table 19).

There has been considerable research interest in fuzzy logic to improve computing environments in many fields, including resource management, fog security, and attack detection and prediction. A brief overview of different uses of fuzzy logic in scheduling studies is provided here (see Fig. 34).

-

IoT data generated by fog cannot be processed locally due to the limited resources available. Thus, it will be essential to choose between the fog layer and the cloud layer. Further, the heterogeneity of fog resources and the varying requirements of tasks influence task execution efficiency. Several task scheduling algorithms use fuzzy logic to optimize task distribution between cloud and fog layers, taking into account different task characteristics, including the task length, deadline, security demand, and resource constraints.

-

It is necessary for the service provider to balance conflicting objectives such as decreasing overall operation costs and minimizing resource wastage. The fitness function in some scheduling algorithms is based on fuzzy systems for choosing the most appropriate node to serve the tasks of the users. This step mainly uses fuzzy theory to assign the best node to tasks based on task characteristics (e.g., task length and file size) and resource characteristics (e.g., CPU speed and storage).

-