Errors in GHCN metadata inventories show stations off by as much as 300 kilometers

Guest post by Steven Mosher

In the debate over the accuracy of the global temperature record, nothing is more evident than the errors in the location data for stations in the GHCN inventory. That inventory is the primary source for all the temperature series.

One question is “do these mistakes make a difference?” If one believes, as I do, that the record is largely correct, then it’s obvious that these mistakes cannot make a huge difference. If one believes, as some do, that the record is flawed, then it’s obvious that these mistakes could be part of the problem. Up until now, that is where the two sides of the debate stand.

Believers are convinced that the small mistakes cannot make a difference; disbelievers hold that these mistakes could in fact contribute to bias in the record. Before I get to the question of whether or not these mistakes make a difference, I need to establish the mistakes, show how some of them originate, correct them where I can, and then do some simple evaluations of their impact. This is not a simple process. Throughout it, I think we can say two things that are unassailable:

1. The mistakes are real. 2. We simply don’t know if they make a difference. Some believe they cannot (but they haven’t demonstrated that) and some believe they will (but they haven’t demonstrated that). Demonstrating either position requires real work, and up to now no one has done this work.

This matters primarily because, to settle the question of UHI, stations must be categorized as urban or rural. That entails collecting some information about the character of each station, say its population or the characteristics of the surrounding land surface. So location matters. Consider Nightlights, which Hansen 2010 uses to categorize stations as urban or rural. That determination is made by looking up the value of a pixel in an image at the station’s reported location. If the pixel is bright, the site is urban. If it is dark (for example, because the station is mis-located in the ocean), the site is rural.
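To make the lookup concrete, here is a minimal Python sketch of a single-pixel classification. It assumes a toy north-up global grid with 1° cells and a made-up brightness threshold (`DARK_MAX`); the grid, the threshold and the function names are hypothetical, not the actual Nightlights product or the Hansen 2010 code. The one bright cell is placed under the WMO position of Hassi-Messaoud so that the WMO and GHCN coordinates discussed later in this post can be compared.

```python
import math

# Hypothetical threshold: pixels at or below this value count as "dark".
# A placeholder, not the value used in Hansen 2010.
DARK_MAX = 10

def latlon_to_pixel(lat, lon, res_deg, nrows, ncols):
    """Row/col of the cell containing (lat, lon) in a north-up grid whose
    top-left corner is 90N, 180W and whose cells are res_deg wide."""
    row = int(math.floor((90.0 - lat) / res_deg))
    col = int(math.floor((lon + 180.0) / res_deg))
    # Clamp so lat = -90 or lon = 180 fall in the last row/column.
    return min(max(row, 0), nrows - 1), min(max(col, 0), ncols - 1)

def classify(grid, lat, lon, res_deg, dark_max=DARK_MAX):
    """Label a station 'rural' or 'urban' from the single pixel under it."""
    row, col = latlon_to_pixel(lat, lon, res_deg, len(grid), len(grid[0]))
    return "rural" if grid[row][col] <= dark_max else "urban"

# Toy 1-degree global grid (hypothetical): all dark except one bright cell.
RES = 1.0
grid = [[0] * 360 for _ in range(180)]
grid[58][186] = 50  # the cell containing 31.67N, 6.15E

print(classify(grid, 31.66667, 6.15, RES))  # WMO position
print(classify(grid, 31.70, 2.90, RES))     # GHCN position
```

The same station comes out urban at one position and rural at the other, which is the whole concern in miniature.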

In the GHCN metadata a station may be reported at location xyz.xyN yzx.yxE when in reality it can be many miles from that location. That means the Nightlights lookup, or a lookup in ANY georeferenced dataset (impervious surfaces, gridded population, land cover), may be wrong. One of my readers alerted me to a project to correct the data. That project can be found here. That resource led to other resources, including a two-year project to correct the data for all weather stations. It’s a huge repository. That in turn led to the WMO documents, one of the putative sources for GHCN. This source also has errors. Luckily, in 2009 the WMO asked all member nations to report more accurate data. That process has yet to be completed; when it is done, we should have data reported down to the arc second. Until then we are stuck trying to reconcile various sources.

The first problem to solve is loss of precision. The WMO reports positions down to the arc minute. It’s clear that when GHCN transforms this data into decimal degrees, they round and truncate, and on occasion these truncations will move a station. I’ve documented that by examining the original WMO documents and the GHCN documents. In other cases it is hard to see the exact error in GHCN, but the values clearly don’t track with WMO. First the WMO coordinates for WMO 60355, and then the GHCN coordinates:

WMO: 60355 SKIKDA 36 53N 06 54E [36.8833333, 6.9000]

GHCN: 10160355000 SKIKDA 36.93 6.95

GHCN places the station in the ocean. WMO places it on land as seen above.
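A minimal sketch of the degrees-and-minutes conversion, assuming WMO positions arrive as strings like `36 53N` (the function name `dm_to_decimal` is hypothetical). Rounding the Skikda values to two decimals gives 36.88 and 6.9, not GHCN’s 36.93 and 6.95, so simple rounding does not explain this particular discrepancy.

```python
import re

def dm_to_decimal(text):
    """Convert a 'DD MMH' string such as '36 53N' to decimal degrees.
    South and west come back negative. The input format is an assumption."""
    m = re.fullmatch(r"\s*(\d+)\s+(\d+)\s*([NSEW])\s*", text)
    if m is None:
        raise ValueError(f"unrecognised coordinate: {text!r}")
    deg, minutes, hemi = int(m.group(1)), int(m.group(2)), m.group(3)
    value = deg + minutes / 60.0
    return -value if hemi in "SW" else value

lat = dm_to_decimal("36 53N")  # WMO 60355 SKIKDA
lon = dm_to_decimal("06 54E")
print(round(lat, 2), round(lon, 2))  # 36.88 6.9
# GHCN lists 36.93 6.95, which two-decimal rounding does not produce.
```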

To correct these locations, I started working through the various sources. In this post I begin by correcting the GHCN inventory using WMO information as the basis, aware, of course, that WMO may have its own issues. The task is complicated by the lack of any GHCN documents showing how they used WMO documents. So, as a first step, I compared the GHCN inventory with the WMO inventory and looked at those records where GHCN and WMO have the same station number and station name. That is difficult in itself because GHCN truncates names to fit a data field. It is also complicated by respellings, multiple names for a single site, GHCN Imod flags, and WMO station index sub-numbers.

Here is what we find. If we start with the 7200 stations in the GHCN inventory and use the WMO identifier to look up the same stations in the WMO official inventory we get roughly 2500 matches. Here are the matching rules I used.

1. The WMO number must be the same.

2. The GHCN name must match the WMO name (or an alternate name must match).

3. The GHCN ID must not have any Imod variants (no multiple stations per WMO number).

4. The WMO station must not have any sub-index variants (107 WMO numbers have sub-indexes).
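The four rules can be sketched in Python as below. The record layout (dicts with `id`, `name`, `lat`, `lon` for GHCN and `wmo`, `name`, `alt`, `lat`, `lon` for WMO), the name normalization and the function names are assumptions for illustration; in particular, this simple version does not handle names that GHCN has truncated to fit its field. The second Bjornoya record in the example is invented to exercise rule 3.

```python
from collections import Counter
import re

def norm(name):
    """Upper-case a station name and keep only letters and digits."""
    return re.sub(r"[^A-Z0-9]", "", name.upper())

def match_stations(ghcn, wmo):
    """Return (ghcn, wmo) record pairs that pass the four matching rules."""
    # Rule 3: how many GHCN records share each WMO number (ID digits 4-8)?
    ghcn_counts = Counter(int(g["id"][3:8]) for g in ghcn)
    # Rule 4: keep only WMO numbers that appear once (no sub-index variants).
    wmo_counts = Counter(w["wmo"] for w in wmo)
    unique_wmo = {w["wmo"]: w for w in wmo if wmo_counts[w["wmo"]] == 1}

    pairs = []
    for g in ghcn:
        wmo_id = int(g["id"][3:8])
        if ghcn_counts[wmo_id] != 1 or g["id"][8:] != "000":
            continue                    # rule 3: Imod variants present
        w = unique_wmo.get(wmo_id)      # rules 1 and 4
        if w is None:
            continue
        names = {norm(w["name"])} | {norm(a) for a in w["alt"]}
        if norm(g["name"]) in names:    # rule 2: name or alternate name
            pairs.append((g, w))
    return pairs

ghcn = [
    {"id": "63401001000", "name": "JAN MAYEN", "lat": 70.93, "lon": -8.67},
    {"id": "63401025000", "name": "TROMO/SKATTO", "lat": 69.50, "lon": 19.00},
    {"id": "63401028000", "name": "BJORNOYA", "lat": 74.52, "lon": 19.02},
    # Invented Imod variant, for illustration only:
    {"id": "63401028001", "name": "BJORNOYA", "lat": 74.50, "lon": 19.00},
]
wmo = [
    {"wmo": 1001, "name": "JAN MAYEN", "alt": [], "lat": 70.93333, "lon": -8.666667},
    {"wmo": 1025, "name": "TROMSO/LANGNES", "alt": [], "lat": 69.68333, "lon": 18.916667},
    {"wmo": 1028, "name": "BJORNOYA", "alt": [], "lat": 74.51667, "lon": 19.016667},
]
print([g["id"] for g, _ in match_stations(ghcn, wmo)])  # ['63401001000']
```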

That’s a bit hard to explain, but in short I try to match the stations that are unique in GHCN with those that are unique in the WMO records. Here is what some sample records look like (GHCN ID, name, lat, lon, then WMO number, name, lat, lon). WMO positions are translated from degrees and minutes to decimal degrees, and the full precision is retained, so you can check them against GHCN’s rounding. As we saw in previous posts, slight movements in stations can move them from bright to dark pixels and from dark to bright.

63401001000 JAN MAYEN 70.93 -8.67 1001 JAN MAYEN 70.93333 -8.666667

63401008000 SVALBARD LUFT 78.25 15.47 1008 SVALBARD AP 78.25000 15.466667

63401025000 TROMO/SKATTO 69.50 19.00 1025 TROMSO/LANGNES 69.68333 18.916667

63401028000 BJORNOYA 74.52 19.02 1028 BJORNOYA 74.51667 19.016667

63401049000 ALTA LUFTHAVN 69.98 23.37 1049 ALTA LUFTHAVN 69.98333 23.366667

You also see some of the name matching difficulties where the two records have the same WMO and slightly different names. If we collate all differences on lat and lon in matching stations we get the following:

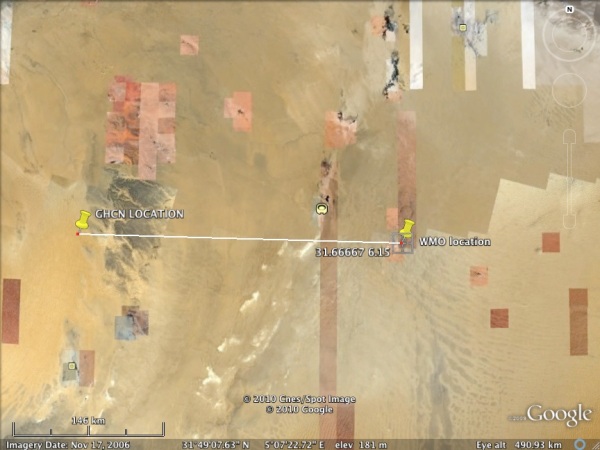
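The rounding check can be sketched on the five sample records. If GHCN merely rounded the full-precision WMO values to two decimals, each GHCN value should equal the rounded WMO value. This minimal Python sketch uses only the numbers listed above; `rounding_explains` is a hypothetical helper, and its tolerance is an arbitrary choice to absorb floating-point noise. On these five records only the Tromso pair fails, which fits the different station names in that pair.

```python
# (GHCN id, GHCN lat, GHCN lon, WMO lat, WMO lon), copied from the sample above.
records = [
    ("63401001000", 70.93, -8.67, 70.93333, -8.666667),
    ("63401008000", 78.25, 15.47, 78.25000, 15.466667),
    ("63401025000", 69.50, 19.00, 69.68333, 18.916667),
    ("63401028000", 74.52, 19.02, 74.51667, 19.016667),
    ("63401049000", 69.98, 23.37, 69.98333, 23.366667),
]

def rounding_explains(ghcn_val, wmo_val, places=2):
    """True if the GHCN value is just the WMO value rounded to `places` decimals."""
    return abs(round(wmo_val, places) - ghcn_val) < 10 ** -(places + 2)

for gid, glat, glon, wlat, wlon in records:
    dlat, dlon = glat - wlat, glon - wlon  # GHCN minus WMO, in degrees
    ok = rounding_explains(glat, wlat) and rounding_explains(glon, wlon)
    print(f"{gid} dlat={dlat:+.4f} dlon={dlon:+.4f} rounding_only={ok}")
```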

And when we check the worst record, we find the following:

WMO: 60581 HASSI-MESSAOUD 31.66667 6.15

GHCN: 10160581000 HASSI-MESSOUD 31.7 2.9

GHCN has the station at longitude 2.9. According to GHCN, the station is an airport:

The location in the WMO file

The difference is roughly 300 km. WMO is more correct than GHCN; GHCN is off by 300 km.

An old picture of the approach (weather station is to the left)
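The roughly 300 km figure can be checked with the haversine great-circle formula. A minimal sketch, assuming a spherical Earth of radius 6371 km (an approximation; an ellipsoidal calculation would differ slightly); `haversine_km` is a hypothetical helper name.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean radius; spherical-Earth approximation

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two points in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))

# Hassi-Messaoud: WMO position vs. GHCN position
d = haversine_km(31.66667, 6.15, 31.7, 2.9)
print(f"{d:.0f} km")
```

For these two positions the result is a little over 300 km, almost all of it from the 3.25° difference in longitude.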

Now, why does this matter? GISS uses the GHCN inventory to get Nightlights values. Nightlights uses the location information to determine whether the pixel is dark (rural) or bright (urban).

NASA thinks this site is dark. They think it is pitch dark. Of course, they are looking 300 km away from the real site. From the inventory used in H2010:

10160581000 HASSI-MESSOUD 31.70 2.90 398 630R HOT DESERT A 0

“1. the mistakes are real. 2. we simply don’t know if they make a difference. Some believe they cannot (but they haven’t demonstrated that) and some believe they will (but they haven’t demonstrated that).”

While this is true — as a pure logical matter — the position that the record is accurate bears the burden of showing that it is the case and that the record can be trusted. The proponent of a theory, or a methodology, or a dataset must demonstrate a meaningful level of accuracy before anyone else should be required to even take it seriously. I can’t simply assert that my dataset is good, and then it is up to the other side to pick it apart.

Having seen poor siting, poor data entry (sometimes even switching the sign of the entry), infilling over hundreds of kilometers, adjustments to data, and, now, sites in the wrong locations, I don’t personally feel any need to take the record seriously until the mess gets cleaned up.

Of course, we really need to ask: accurate in which sense? Is the record “accurate” enough in broad strokes and at a very rough resolution to show some level of warming over the past century? Yeah, probably. Is it accurate enough for us to know, to within tenths of a degree, what has happened across the globe, whether the warming is meaningful, whether it has been global, regional, isolated, etc.? Is it accurate enough for us to identify any kind of meaningful trend from the noise? It is in these areas that, if I may be so bold, many of us have serious doubts. The record has certainly never been shown to have any such skill, and each revelation of problems with the record only adds to the unease. The whole thing is pretty spooky. 🙂

“If we start with the 7200 stations in the GHCN inventory and use the WMO identifier to look up the same stations in the WMO official inventory we get roughly 2500 matches.”

This kind of “slop” certainly does not engender confidence. With 65% of the stations not being located exactly where the analysts think they are and the analysts categorizing and adjusting data based on location, who could trust the results?

Skeptics like me have noticed that “slop” which produces results skewed toward “warming” is accepted and goes unquestioned and uncorrected within the “true believing” scientific community. Conversely, “slop” that produces results contrary to the theory of AGW is intensely questioned within that community and corrected without delay. That mind-set is guaranteed to produce a false record tending toward a preconceived conclusion.

Fascinating, Steven! Not sure of the ultimate significance, but another interesting piece of the pie.

“1. the mistakes are real”

Ok, with all the money spent on climatology, why is it that the raw data is not more carefully collected?

E.g. in the UK 1700 ‘scientists’ signed a petition supporting the conclusions of the IPCC. How come these same ‘scientists’ could not keep the temperature records straight? I rather think at this point we have more ‘scientists’ than thermometers.

Perhaps it’s as much about scientific credibility as it is about whether “these mistakes make a difference.”

If the so-called scientists who use the data these stations produce are either unaware of or unconcerned that station positions are up to 300 km off, then I would question the credibility of these scientists. Add in all the other temperature recording and adjustment problems we hear about on WUWT, and these so-called climate scientists lose all credibility. They have simply become a complete waste of money, time and space.

In the end, this all rests somewhat on philosophy. I’ve been deeply concerned (albeit not from an alarmist side) about AGW since 1988. I believe that AGW has long broken past being a scientific issue (if it ever was one) and into a religious, political issue. I want to be careful here about bringing up my medical issues as a parallel to the issues regarding AGW. But those issues have taught me things about the lack of ‘certainty’ in science. This lack of certainty is, in the end, a wondrous thing. I wish to dwell upon this further on this fine website.

If you start from the basis of one particular location in your community, then expand that to cover other locations as well, then it is difficult if not impossible to find that there has been any warming at all since records began.

If you start to find evidence of some scattered warming, then look for evidence of UHI.

Match rainfall with temperature.

Rising temperature with flat rainfall is a sure pointer to UHI.

A simple check would be to determine the trend at the 2500 stations that match and compare it to the entire sample. Statistically, a population that large is enough to show the trend.

If there is no difference between the 2500 and the 7200, then the catalog error is insignificant. If there is a difference, then it needs investigation.
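A sketch of that check, assuming per-station trends have already been computed (for example with a least-squares fit). Because the 2500 matched stations are themselves part of the 7200, this version compares matched against unmatched stations, which is one reasonable way to frame the test. Welch’s t-statistic is included only as a rough guide: it assumes stations are independent, which nearby stations are not. `compare_trends` and the toy trend values are hypothetical.

```python
import statistics

def compare_trends(trends, matched_ids):
    """Mean trend of matched vs. unmatched stations, plus Welch's t-statistic.

    trends: dict mapping station id -> trend (e.g. degrees C per century)
    matched_ids: set of station ids that passed the GHCN/WMO match
    Each group needs at least two stations for the variance."""
    a = [t for sid, t in trends.items() if sid in matched_ids]
    b = [t for sid, t in trends.items() if sid not in matched_ids]
    mean_a, mean_b = statistics.fmean(a), statistics.fmean(b)
    # Welch's t: difference of means over its estimated standard error.
    se = (statistics.variance(a) / len(a) + statistics.variance(b) / len(b)) ** 0.5
    return mean_a, mean_b, (mean_a - mean_b) / se

# Toy, made-up trends for six stations.
trends = {"s1": 0.5, "s2": 0.7, "s3": 0.6, "s4": 0.9, "s5": 1.1, "s6": 1.0}
print(compare_trends(trends, {"s1", "s2", "s3"}))
```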

I don’t think it matters that much, as there is enough data to support that the Earth is warmer now than it has been for most of the last few hundred years. That it is warmer now also doesn’t matter, as that kind of variation is natural.

John Kehr

The Inconvenient Skeptic

Mosh, take a look at MMS record for Blue Hill station, MA.

You will see several different locations listed. I spoke to the curator up there (very longtime old dude). He insisted there had been only one station move since 1905 and that was decades ago and the move was less than 20 feet.

They have the full suite of equipment up there, but they take the USHCN data straight from a big old Hazen screen, the same one that’s been there over 100 years. They also list the equipment as MMTS since 1990 (the curator said they never even hooked that junk up). They also have two hygros, only one of which was listed (but no longer is listed). They only mention the SRG, although they have all sorts of new and spiffy equipment (including one of those spanking new ASOS-type rain gauge jobs) that is not listed in their equipment.

The Hazen screen is never mentioned as part of equipment (though a more recent CRS is noted).

So you can count on NCDC not only making big errors regarding siting, but they also appear prone to error on equipment and even what sort of sensor is being used to collect their data.

Once current locations are verified, how will the correctness of previous locations be dealt with? Presumably the same type of errors have always existed with the data set.

“Consider Nightlights which Hansen2010 uses to categorize stations into urban and rural. That determination is made by looking up the value of a pixel in an image. If it is bright, the site is urban. If it’s dark (mis-located in the ocean) the site is rural.”

_____________________________________________________________

Correct me if I’m wrong, but even with increasing population and urbanisation, the overwhelming majority of the Earth’s surface would still be categorised as dark.

It is therefore logical to assume that if a station is randomly mis-located, then there is a far greater chance that it will be wrongly categorised as a rural station when it should be urban than as an urban station when it should be rural.

Wrongly categorising an urban station as rural would mean that no allowance would be made for any UHI effect.

Lo and behold, we have the potential, at least, for bias in the record.

That seemed too easy, so if I am missing something blindingly obvious, I apologise in advance…….

Ok, with all the money spent on climatology, why is it that the raw data is not more carefully collected?

Okay, remember the old Soviet-era Russian joke about the bridge and the watchman?

Thank you, Steven. The difference from Dr. Trenberth’s “Scientists almost always have to massage their data, exercising judgment about what might be defective and best disregarded” couldn’t be more striking.

If the data is potentially flawed, a scientist looks to quantify the size and impact of the errors and correct them if possible. Failing that, identifying the impact of the flaws on the conclusions and fully disclosing these limitations is acceptable.

Dr. Trenberth’s comments read far more like the way sales and marketing spreadsheets are “massaged” to make a good business case, a job I used to have.

Natural philosophy has a long and honourable tradition of welcoming the contributions of the skilled or expert amateur: where indeed would our astronomical friends be without their army of amateur stargazers watching the heavens?

And indeed as Anthony Watts’ surface station project has shown the sheer manpower the amateurs can mobilise is awesome.

You would think climate scientists might welcome these resources and interact with them as the astronomers do.

Not a bit. Yet as the amateurs and some professional scientists have slowly come together in the blogosphere, they are increasingly challenging the scientific integrity of climatology from almost every angle. You yourself, Mr. Mosher, are addressing a serious issue with the quality of the observations used for surface temperature sets.

Although your approach is valid and offers important insights, it will not be welcomed by the professionals. Personally I find your observations fascinating. But no doubt your painstaking work will be derided and ridiculed, and personal slurs will be made about you.

Strange, is it not? Yet stranger still that the blogosphere has managed to mobilise a large number of well-informed, largely unpaid people to challenge the scientific establishment of climatology on almost every aspect of their discipline, as they carry it out to their profit, to their professional advancement and, above all else, to their entire satisfaction. For none in their tight little world will gainsay them.

Yet despite all attempts to ignore, suppress and vilify it, this grassroots approach has gained traction, and slowly, very slowly, it is shifting the debate from rhetoric, polemic and derision, not to mention obstruction, to serious scientific investigation.

Which is to be welcomed.

But it is very strange nevertheless.

Amazing thing t’internet. Interesting times Mr. Mosher, interesting times indeed.

Kindest Regards

I’m a long-distance hiker and I’ll tell you with no uncertainty that 10 km can, and very often does, make a very large difference in local conditions. A 300 km error makes the data AND any calculations based on that data simply ridiculous.

I’m also a systems engineer with over 50 years of experience with instrumentation of little things like nuclear reactors, amphibious vehicles, scientific spacecraft, etc. For most of that time I was the science operations engineer for such minor programs as Landsat, UARS and HST. And I have never in all those years seen either scientists or engineers be as unconscionably sloppy as has become abundantly and obviously common in the “climate community”. If I’d been that sloppy at any time in my 50+ year career, I’d have been fired, and rightfully so.

Tell me again – why do we pay these guys the big bucks? IMO, what they seem to term “science” wouldn’t get a passing grade in a middle school science fair.

Hmmm – I guess it’s obvious that I’m not happy with these people. They give science, engineering — and mathematics, a black eye.

Place thermometer. Note location. Take periodic readings.

I can see how that could pose problems.

On a related note, are there any statistics how many of these scientists have done themselves a mischief through being unable to distinguish between depressions in the earth and certain areas of their anatomy?

I hope that this is not a dumb question, but has anyone done any work looking at the combination of uncertainties involved in the sequence of

a. recording the temperature at all these places – with possibilities for error/UHI/equipment failures/calibration errors/misreads etc

b. working out where the damned things are

c. collecting the data

d. ‘adjusting’ the data

e. sticking it all into some ginormous computer program (preferably not by …) and computing a daily/weekly/monthly/annual average

and all the other processing steps involved.

My ‘feeling’ is that given all the uncertainties involved, we might be able to detect really large changes in temperature (5 degrees or so over a century). But to accurately find a change of less than 1 degree seems to stretch the point a bit. Even with a faultless sequence of processing from data capture to overall answer, that would seem to be pretty unlikely.

But we continue to see more and more evidence that we do not have faultless data collection nor processing…and there is a slight odour of sharp practice as well.

Can we really have the level of faith in the temperature record that we are asked to believe? Or is the signal too small and the noise too great?

I remember a meteorologist in Honolulu laughingly pointing out to a reporter trumpeting a record temperature that his instruments a mere half mile away would read significantly lower, because he was stuck at a frickin airport, and that the ‘record’ was bogus. Of course, the local news outlet left off the explanation and went with the ‘record’, even though the meteorologist gave a very concise explanation of the urban heat effect on near-ground instrumentation.

Let everyone be clear. It is warmer in Hawaii than it was 25 years ago. Is it unprecedented? Absolutely not.

“If we start with the 7200 stations in the GHCN inventory and use the WMO identifier to look up the same stations in the WMO official inventory we get roughly 2500 matches.

This kind of “slop” certainly does not engender confidence. With 65% of the stations not being located exactly where the analysts think they are and the analysts categorizing and adjusting data based on location, who could trust the results?”

You have to be careful. Far more than 2500 match. It depends what you mean by match.

For example, GHCN will have a single WMO number with up to 11 Imods for that one WMO number.

That’s 11 different locations. Well, some of these are historical names and some are different locations; I haven’t even started to untangle that. Of the 7200, about 4000 have Imods, so I haven’t even started to check those yet.

I tried to find the clearest cases where the GHCN record came from the WMO document. The WMO number had to be the same, there could be no Imod, and the station names had to “match.” Even here the station names don’t match exactly. So where does the GHCN data come from? WMO is supposed to be the source.

WMO: 60581 HASSI-MESSAOUD 31.66667 6.15

GHCN: 10160581000 HASSI-MESSOUD 31.7 2.9

Frank Lee Meidere says:

October 31, 2010 at 9:59 pm

Place thermometer. Note location. Take periodic readings.

I can see how that could pose problems.


Stations move, airports move, cities become ghost towns. They change names. Countries get renamed. Russian spelling varies. Some people write MT, others write Mount. Some people write Saint Louis, others write St. Louis. Unravelling the history of all the changes is tough work.

“Although your approach is valid and offers important insights it will not be welcomed by the professionals. Personally I find your observations fascinating. But no doubt your painstaking work will be derided, ridiculed and personal slurs made about you.”

Doesn’t bug me. I learned how to vectorize a function! Yippee. Which is to say, I really don’t do this to gain anybody’s approval. It doesn’t earn me any money. It’s peaceful to work on problems like this.

Anything is possible says:

October 31, 2010 at 8:58 pm

“Consider Nightlights which Hansen2010 uses to categorize stations into urban and rural. That determination is made by looking up the value of a pixel in an image. If it is bright, the site is urban. If it’s dark (mis-located in the ocean) the site is rural.”

_____________________________________________________________

Correct me if I’m wrong, but even with increasing population and urbanisation, the overwhelming majority of the Earth’s surface would still be categorised as dark.

It is therefore logical to assume that if a station is randomly mis-located, then there is a far greater chance that it will be wrongly categorised as a rural station when it should be urban than as an urban station when it should be rural.

@@@@@@@@@@@@@@@

You might think that. I’d rather prove it, one way or the other.

But proof is hard. Conjecture is easy.
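One cheap step toward proof is a toy Monte Carlo. This sketch assumes the worst case, where a mis-located station lands on an independent random pixel, and that a fraction `bright_fraction` of pixels is lit while a fraction `station_urban_fraction` of stations truly sits on lit pixels; both parameters and the function name are hypothetical. Under those assumptions the simulation just reproduces simple arithmetic (urban-to-rural errors occur with probability about 1 - p, rural-to-urban about p, where p is the lit fraction), so settling the real question would still need the actual position errors and the actual Nightlights map.

```python
import random

def misclassification_rates(bright_fraction, station_urban_fraction,
                            n=100_000, seed=0):
    """Toy worst case: each station's true pixel is urban with probability
    station_urban_fraction; after mis-location it sits on an independent
    random pixel that is lit with probability bright_fraction.
    Returns (P(urban read as rural), P(rural read as urban))."""
    rng = random.Random(seed)  # fixed seed for repeatable runs
    urban = urban_to_rural = rural = rural_to_urban = 0
    for _ in range(n):
        truly_urban = rng.random() < station_urban_fraction
        looks_urban = rng.random() < bright_fraction
        if truly_urban:
            urban += 1
            urban_to_rural += int(not looks_urban)
        else:
            rural += 1
            rural_to_urban += int(looks_urban)
    return urban_to_rural / urban, rural_to_urban / rural

u2r, r2u = misclassification_rates(bright_fraction=0.1, station_urban_fraction=0.3)
print(f"urban->rural {u2r:.3f}, rural->urban {r2u:.3f}")
```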

I recently reread the paper Contiguous US Temperature Trends Using NCDC Raw and Adjusted Data for One-Per-State Rural and Urban Station Sets by Dr. Edward R. Long. In that paper, Dr. Long shows that when a least-squares linear approximation is applied to a climate station data set, the rural stations seem to have a significantly shallower slope than urban stations. In particular, the rural stations have a slope of 0.13 C/century, versus 0.79 C/century for urban stations.

It seems to me that all you have to do is perform a least-squares linear fit on every station data set and look for the ones that have a shallow slope. Then examine the metadata for these sites to figure out whether they are rural or not. The reverse can be done for urban sites.

Given that most of North America (actually most of the planet) is rural, plotting the rural data should be sufficient to identify any global warming trend. The raw data rural slope reported in the paper above is quite shallow (0.13 C/century). It would be interesting to see if a more comprehensive set of rural stations yields a similar slope. If global warming is as pervasive as many in the scientific community claim, even selecting rural stations with low slopes should give a higher aggregate slope than 0.13 C/century.
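The least-squares screen described above can be sketched in plain Python. `ols_slope` and `shallow_stations` are hypothetical helpers, the input layout (station ID mapped to years and annual temperatures) is an assumption, and the threshold is whatever the analyst chooses; nothing here reproduces the numbers in Dr. Long’s paper. As the comment says, the stations it returns would still need a metadata check to confirm that they are rural.

```python
def ols_slope(years, temps):
    """Ordinary least-squares slope of temps against years (degrees per year)."""
    n = len(years)
    if n < 2:
        raise ValueError("need at least two points")
    mx = sum(years) / n
    my = sum(temps) / n
    sxx = sum((x - mx) ** 2 for x in years)
    sxy = sum((x - mx) * (y - my) for x, y in zip(years, temps))
    return sxy / sxx

def shallow_stations(series, max_per_century):
    """Sorted IDs whose fitted trend, in degrees per century, is at or below
    max_per_century. series: dict station_id -> (years, temps)."""
    return sorted(
        sid for sid, (yrs, ts) in series.items()
        if ols_slope(yrs, ts) * 100 <= max_per_century
    )

# Synthetic, noise-free example: 0.1 and 0.8 degrees per century.
years = list(range(1900, 2001))
series = {
    "A": (years, [10 + 0.001 * (y - 1900) for y in years]),
    "B": (years, [10 + 0.008 * (y - 1900) for y in years]),
}
print(shallow_stations(series, max_per_century=0.2))  # ['A']
```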

Jim Owen says:

October 31, 2010 at 9:49 pm

I’m a long-distance hiker and I’ll tell you with no uncertainty that 10 km can, and very often does, make a very large difference in local conditions. A 300 km error makes the data AND any calculations based on that data simply ridiculous.

$$$$$$$$$$$

Be careful. All you know is that the station is in the inventory. It might not even have enough years of temperature data. That is why I caution people: I am only looking at the location data here.

Slow down. Step by step.

1. GHCN has an inventory of station metadata.

2. We are going to try to figure out whether it is correct, or whether we can fix it.

The discrepancy in the first picture could well be due to sea level rises caused by global ………….(whatever today’s name is). It’s so obvious!!!!

Not so sure that is the weather station to the left of the runway. It might be the VOR/DME, if I look at the airfield diagrams.

At the risk of climbing on a bandwagon here, I also don’t understand how there can be any questions left. Their conclusions are almost always long-term projections, which means that the DATA, as well as the methods of handling the data, must be faultless.

When you start with faulty data, extrapolating any conclusions from it is pointless. And the further out you project, the larger the error. So climate warnings for a century from now are worthless, total imaginary garbage.

And on these they are basing “emergency” carbon emissions laws?

I’m not a scientist in any way, but even a layman can spot a con artist at work.

I’m a biological scientist interested in genetics generally, and the genetics of human disease specifically. I can see a broadly comparable situation in my world to what appears to be happening here. I’m a data generator: I do experiments and generate my own raw data to look at different diseases and their genetics. However, increasingly in science we’re being encouraged to collaborate, and in my field that means collaborating with medically qualified staff. To be clear, I have nothing against medically qualified people, but there is a clear view amongst a lot of people that the best people to do “medical” research are the medics. This is, of course, nonsense: properly trained scientists are equally capable and, in many cases, better equipped to do so. Anyway, repeatedly in my experience, medical researchers want genetic data and want to analyse and publish that data without ever wanting to understand how it was generated and what the issues and concerns with the underlying data might be (i.e. its accuracy, reliability, etc.). I’ve also had many cases where I’ve raised concerns about the quality of underlying data, only to be told not to worry about it. In one case I asked to have my name taken off a publication where I felt this had led to flaws in the data interpretation, which was the catalyst for my concerns to be taken seriously.

It’s just an example to say there are other places where people place a great deal of “faith” in the quality of the data they’re relying on to say something significant, without actually having the tiniest clue about its validity or reliability.

Makes one wonder if the meaning of ‘robust’ needs to be amended.

The WMO coordinates for Skikda are at an airport, and Weather Underground uses roughly the same lat/long.

http://www.maplandia.com/algeria/airports/skikda-airport/

The GHCN database likely has the wrong coordinates, but if they are using WMO data, the station ID should have three zeros at the end.

Variable Definitions:

ID: 11 digit identifier, digits 1-3=Country Code, digits 4-8 represent the WMO id if the station is a WMO station. It is a WMO station if digits 9-11="000".

LATITUDE: latitude of station in decimal degrees

LONGITUDE: longitude of station in decimal degrees

STELEV: is the station elevation in meters

NAME: station name

ftp://ftp.ncdc.noaa.gov/pub/data/ghcn/v3/README

As for HASSI-MESSAOUD, it may be someone with a “fat” finger. Even MESSAOUD is spelled wrong in GHCN.
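Following the README definition quoted above, the ID can be split mechanically. A minimal sketch; `GhcnId` and `parse_ghcn_id` are hypothetical names, not part of any NOAA tool. Applied to the Hassi-Messaoud ID 10160581000 it yields country code 101, WMO number 60581 and modifier 000, so by the README’s rule it is a WMO station.

```python
from typing import NamedTuple

class GhcnId(NamedTuple):
    """Parts of an 11-digit GHCN station ID, per the README quoted above."""
    country: str   # digits 1-3: country code
    wmo: int       # digits 4-8: the WMO id if this is a WMO station
    modifier: str  # digits 9-11: "000" marks a WMO station

    @property
    def is_wmo_station(self) -> bool:
        return self.modifier == "000"

def parse_ghcn_id(station_id: str) -> GhcnId:
    """Split an ID such as '10160581000' into country, WMO number and modifier."""
    if len(station_id) != 11 or not station_id.isdigit():
        raise ValueError(f"not an 11-digit GHCN id: {station_id!r}")
    return GhcnId(station_id[:3], int(station_id[3:8]), station_id[8:])

print(parse_ghcn_id("10160581000"))
# GhcnId(country='101', wmo=60581, modifier='000')
```

Converting digits 4-8 to an integer drops leading zeros, which is how GHCN 63401001000 lines up with WMO 1001 (JAN MAYEN) in the sample records.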

John Kehr

I don’t think it matter that much as there is enough data to support that the Earth is warmer now than it has for most of the last few hundred years.

This is another of those rather silly arm-waving statements. When the science is bad and the measurement techniques are bad and the data collection is bad, how can you make a statement like that?

Steve Mosher.

I just wonder what effect this mis-location problem has when GISS does its 1200 km aggregation. At 300 km, these stations are 25% of the way out from their centre point.
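To put numbers on the 25% remark: a sketch assuming, as I understand the GISS scheme, that a station’s weight falls linearly from 1 at the centre point to 0 at 1200 km (treat that taper, and the helper name `linear_weight`, as assumptions). Under it, a 300 km position error can shift a station’s weight by up to 0.25 in either direction, and can push a station near the edge out of the circle entirely.

```python
RADIUS_KM = 1200.0  # averaging radius mentioned in the comment

def linear_weight(distance_km, radius_km=RADIUS_KM):
    """Assumed weight: 1 at the centre point, falling linearly to 0 at radius_km."""
    return max(0.0, 1.0 - distance_km / radius_km)

error_km = 300.0  # size of the Hassi-Messaoud location error
for reported_km in (0.0, 600.0, 1000.0):
    low = linear_weight(reported_km + error_km)              # actual distance 300 km larger
    high = linear_weight(max(0.0, reported_km - error_km))   # actual distance 300 km smaller
    print(f"reported {reported_km:6.0f} km: weight {linear_weight(reported_km):.3f}, "
          f"could really be {low:.3f} to {high:.3f}")
```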

Questions about the veracity of the temperature record are what initially made me suspicious that many climate scientists are using data extracted from noise to make a case for AGW. The more the topic of the actual collection of data is discussed, the more I become convinced that the data collection methodology is more than a little inconsistent; the final theoretical product seems (to me, at least) to be rather like a symphony played by an orchestra from a score that was accidentally shredded and then reassembled by a committee of statisticians who had no idea of what the symphony sounded like in the first place, and whose final product is nothing more than a collection of random noise.

My initial doubts about methodology have not been assuaged!

Because ‘Nightlights’ brightness falls off from city centers in a roughly Gaussian way, it’s not hard to fall off the bell curve when it comes to rating whether a station needs UHI correction or not. And since most rural stations have been abandoned in the record, uncorrected warming bias is a gimme.

Any given mis-located station would have poor odds of hitting a ‘pixel on’, and thus the vast majority of such stations are undercorrected.

This problem is highly embarrassing and reeks of poor accuracy.

It says that the system never attained the precision necessary to determine the global temperature accurately enough to assess how much the Earth has warmed. It overshot the mark.

The error compounds the uncertainty about exactly how much warming CO2 is responsible for.

Steven, there is a saying among educators who happen to believe that with proper scientific rigor, students can catch up to grade level. Here is the saying:

Belief trumps data. You can have pages and pages of data showing how your students are making “catch up” gains. Doesn’t matter. Most people believe that success in school is an individual trait that cannot be adjusted. Therefore it is the student’s responsibility to learn, not the teacher’s.

I think one of the reasons why this is the way it is is because people don’t want to start over learning something new. If the new way works against their beliefs, it makes all their years of work wrong and subject to “do over” status. So we stick to our beliefs and refuse to budge out of fear of being wrong.

I’m wondering if everybody reading this story knows what metadata is?

I’m curious about “nightlights”. From your description it appears that “bright” is based on the visible light spectrum? Lotsa lights = urban and no lights = rural. Seems to me that’s an oversimplification. No lights on your average granary, but there’s heat coming off it, and plenty of it. Septic field, same. A snow fence can mess with temperature for miles. Seems to me that looking at a map for light sources is pretty primitive when one is trying to measure fractions of a degree over a century. You would need to do a physical survey of each station for nearby changes and keep it updated regularly. Of course then GISS would just extend the temps out 1200 km anyway.

And CO2 would still be logarithmic.

You’re not even trying Mosher, I found one that was several thousand kilometres out.

OK, Evan, I give up. What’s a Hazen screen?

Steven

It is NDB not VOR/DME. VOR/DME is located slightly to the left (it is not visible on this picture).

If you look for Met station check space between taxiways C and D.

Or about 50m North of NDB.

Tomasz Kornaszewski

…Skikda has an Algerian “Edmund Fitzgerald”, apparently:

1989 shipping disaster

The city has a commercial harbour with a gas and oil terminal. On 15th February 1989 the Dutch tanker Maassluis was anchored just outside the port, waiting to dock the next day at the terminal, when extreme weather broke out. The ship’s anchors didn’t hold and the ship smashed on the pier-head of the port. The disaster killed 27 of the 29 people on board.[4] [WIKIPEDIA] … Don’t be 29 aboard… [The Edmund Fitzgerald had 29 too, but no survivors…]

Steven:

This is a question that also came to me when you found the wandering temperature station. Could one or more of these misplaced stations wander from one 5°x5° grid box into another?
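One way to answer this for a particular station is to compute which grid box each candidate coordinate falls in. The sketch below does that for the two Jokkmokk entries quoted further down this thread (GHCN 66.63 N, 19.65 E and WMO 02151 at 66°29'N, 20°10'E). It assumes 5° boxes aligned on multiples of 5° starting from 90°S and 180°W; `grid_box` and `dms_to_decimal` are hypothetical helpers, not part of any GHCN tool.

```python
import math


def dms_to_decimal(deg, minutes, seconds=0.0):
    """Convert degrees/minutes/seconds (northern/eastern hemisphere) to decimal degrees."""
    return deg + minutes / 60.0 + seconds / 3600.0


def grid_box(lat, lon, size=5.0):
    """Return (row, col) of the size x size degree box containing (lat, lon).

    Assumption: boxes are aligned on multiples of `size`, counted from -90 lat and -180 lon.
    """
    row = math.floor((lat + 90.0) / size)
    col = math.floor((lon + 180.0) / size)
    return row, col


# Jokkmokk as listed in the GHCN inventory (decimal degrees)
ghcn = (66.63, 19.65)
# WMO 02151 JOKKMOKK FPL: 66 29N 20 10E
wmo = (dms_to_decimal(66, 29), dms_to_decimal(20, 10))

print(grid_box(*ghcn))  # (31, 39): the box spanning 15-20 E
print(grid_box(*wmo))   # (31, 40): the box spanning 20-25 E
```

Under that assumption the two coordinate pairs sit on either side of the 20°E box edge. The WMO entry carries a different station number (02151) from the GHCN one (02142), so this shows only that a position difference of this size can move a station across a box boundary, not which coordinate is correct.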

@Eric Anderson (first commenter) I agree with Eric Anderson. I am a different Eric Anderson, which I found kind of spooky when I saw the first comment on this post. But clearly we Eric Andersons are a sound-minded lot. 🙂

Whenever I get into arguments with people about global warming, I urge them to go over to the surfacestations.org site and just peruse the pictures of the siting of the temperature sensing apparatus. Then look at the “corrections” to raw data. A few pictures are worth a thousand words. But I doubt many people actually take up my challenge.

OK, I was wrong

What you see in the picture is NOT an NDB. And it is NOT a VOR/DME. According to . NDB is slightly to the South.

According to: it is GP/DME (antenna for ILS system).

Tomasz Kornaszewski

A good first cut would be to check the station location on Google Earth or similar. This would catch rural stations in the middle of a Walmart parking lot or urban stations adjacent to a farm house.

What is apparent is that global temperature records have unresolved QA/QC problems and until they are resolved any conclusions drawn from the data should be filed under fiction.

One would have thought that, as a fundamental prerequisite when compiling the data to be used for the global data set, each and every station would have been audited by individual physical inspection, ascertaining, amongst other matters, its location, all siting issues, station changes, the dates when any station changes were made and precisely what these changes consisted of (e.g., location changes, equipment changes, etc.), the equipment used, how data is collected and recorded, and when the equipment was last calibrated.

It never ceases to amaze me the extent of potential errors that have been allowed to creep into the global data set through simple sloppiness, and how those who advance the AGW theory are blind to the consequence of this, namely that there can be no confidence in the data extracted through homogenisation from this data source and that it is incapable of isolating the signal from the noise.

As others have said, without an accurate data set no reliable projections can be made, and any meaningful extrapolation of data trends into the future is impossible given the poor quality (and potential unreliability) of the underlying data.

One needs to start afresh. In my opinion, we should only be looking at sea temperatures and satellite-collected temperatures, or sea temperatures and wholly unadjusted rural data sets. All other data sets should be disregarded.

Climate is mainly driven by the sea (which covers approx. 70% of the Earth and the volume of which is a giant storage reservoir). Thus sea temperature data is the most important single issue.

As regards land temperature, one only needs to look at unadjusted raw rural data from Canada, USA, UK, Sweden or Norway, Russia, Germany or France, and China to have a very good idea what has happened to the Northern Hemisphere. As regards the Southern Hemisphere, there is little quality data, but my understanding is that unadjusted rural data from New Zealand and possibly Australia suggests little warming. There is no reason to suspect that that data is not typical of the Southern Hemisphere.

I consider that the poles should be looked at separately since these are their own micro climates and the effect of climate change taking place at the poles raises very different issues to climate change occurring at more temperate latitudes.

Wayne Gramlich says:

October 31, 2010 at 11:51 pm

I recently reread the paper Contiguous US Temperature Trends Using NCDC Raw and Adjusted Data for One-Per-State Rural and Urban Station Sets by Dr. Edward R. Long.

Anthony, this paper should be posted if it has not been already. It is very damning – definitely “worse than I thought”. It also raises again the question of when your paper will be published.

If you can’t get your fixed, permanent location correct, why should anyone expect you to correctly measure multiple temperature records daily?

garbage in …

From Long’s paper referenced above, taking urban plus rural residential land area as 5% of total USA land area, we get an area-weighted warming of .19 degrees C for the USA, using the raw data, i.e. about 1/4 of the claimed warming. We also have the recent warm peak slightly lower than the late 1930s warm peak. Long’s analysis has at least one other advantage: it is not distorted by “the march of the thermometers” toward the equator that has been analyzed by Chiefio.

It is at least possible that real global warming in the 20th century was no more than 0.2 degrees C.

I should have said “toward lower latitudes and elevations”.

I apologize for messing up links. I forgot about closing tags.

Tomasz Kornaszewski

Well, if mistakes don’t matter and don’t affect the results, then we could stop taking the data altogether, since the data doesn’t matter, and we could simply make up the results and save a ton of money.

And in any case, why are we wasting so much money to gather data when the data doesn’t really affect anything anyhow?

Now, why does this matter? GISS uses GHCN inventories to get Nightlights. Nightlights uses the location information to determine if the pixel is dark (rural) or bright (urban).

Steven,

This issue will be of greater importance than just citing mistakes. GHCN uses the erroneously determined “rural” locations to adjust the “urban” locations for data gap infill within cells. This homogenizing may be additive to the original error. So if you need further additional peaceful activities, follow the data infilling.

It seems that every time temp data is examined in detail, by country or individual stations, errors are found. How can one have any confidence in a supposed rise of 1°C (or less) over a hundred years with equipment that often has errors larger than this, with unrevealed adjustments, UHI, in-filling, extrapolation, recording mistakes etc.?

Every long standing unadjusted record for the US that has been posted seems to show very small trends if any.

When you are lying or eating fish you have to be careful…

They are NOT LYING: etymologically, META-DATA means (Greek) BEYOND-DATA.

Steven Mosher says:

“It is therefore logical to assume that if a station is randomly mis-located, then there is a far greater chance that it will be wrongly categorised as a rural station when it should be urban than as an urban station when it should be rural.

@@@@@@@@@@@@@@@

You might think that. I’d rather prove it, one way or the other.

But proof is hard. Conjecture is easy.”

I have looked into the Swedish GHCN stations (19). Only 2 have zero nightlights (Jokkmokk and Films kyrkby). These two are also the most displaced stations in the set (about 20 and 30 kilometers), moving Jokkmokk from a minor airport to the middle of a lake and Films kyrkby from a village to the middle of a large uninhabited forest. Films kyrkby, by the way, also has a spurious name (“Kreuzburg”) and a completely fictitious altitude (620 meters ASL instead of 50 meters).

The other stations’ position errors vary from a few hundred meters up to about 10 kilometers, with an average of 1-2 kilometers. The other metadata (airport/non airport, town population, vegetation type, altitude) are also in error for about half the stations.

While these errors are not enough to affect the large scale climate, in my opinion it is quite useless to try and correlate the GHCN data with any kind of geodata at a higher resolution than about 0.1-0.2 degrees (10-20 km).

Steven Mosher says:

October 31, 2010 at 11:49 pm

“You might think that. I’d rather prove it, one way or the other.

But proof is hard. Conjecture is easy.”

_____________________________________________________________

Thanks for your response, Steven, and the best of luck to you with your investigations.

I still can’t help feeling that everything is bass-ackwards, however.

It is the proponents of CAGW, not you, who are proposing that the world should make major changes to its economy and the way that energy is generated, largely on the evidence provided by this database.

Surely the onus should be on THEM to PROVE that it is as accurate as it is possible to make it…….

Wayne:

“I recently reread the paper Contiguous US Temperature Trends Using NCDC Raw and Adjusted Data for One-Per-State Rural and Urban Station Sets by Dr. Edward R. Long. In that paper, Dr. Long shows that when a least squares linear approximation is applied to a climate station data set, the rural stations seem to have a significantly more shallow slope than urban stations. In particular, the rural stations have a slope of .13C/century and .79C/century for urban stations.”

I read that piece and was not very impressed with the methodology, from the data selection to the rural criteria to the math. I’ll go into detail if you like, but I really have other things to do.

“It seems to me that all you have to do is do a least squares linear approximation to all station data sets and look for ones that have a shallow slope. Then examine the meta data for these sites to figure out if they are rural or not. The reverse can be done for urban sites.”

Thought about that. Did something similar. But the hazard is confirmation bias.

“Given that most of North America (actually most of the planet) is rural, plotting the rural data should be sufficient to identify any global warming trend. The raw data rural slope reported in the paper above is quite shallow (.13C/century). It would be interesting to see if a more comprehensive set of rural stations yields a similar slope. If global warming is as pervasive as many in the scientific community claim, even selecting rural stations with low slopes should give a higher aggregate slope than .13C/century.”

Actually that is something I’ll aim at. But you won’t find the .13C/century slope you expect. Ain’t gunna happen. The first problem is using raw data. The raw data has errors and it all needs to be put on the same footing (things like time of observation).

Best you can hope for is a 10% adjustment to the current numbers.
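Wayne's suggestion (fit a least squares line to each station and look for shallow slopes) is easy to sketch. The helper below computes an ordinary least squares slope and scales it to °C per century; `window_slope` restricts the fit to a range of years, which would also serve for comparing, say, 1900-1930 against 1960-2000. Both names are hypothetical, and the sketch does nothing about the issues raised in the reply above: it takes each series as given (no time-of-observation or other adjustments), and selecting stations by slope before checking their metadata invites exactly the confirmation bias Mosher mentions.

```python
def ols_slope_per_century(years, temps):
    """Ordinary least squares slope of temps on years, scaled to degrees per century."""
    n = len(years)
    if n != len(temps) or n < 2:
        raise ValueError("need at least two paired (year, temp) values")
    mean_x = sum(years) / n
    mean_y = sum(temps) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, temps))
    if sxx == 0:
        raise ValueError("years must not all be identical")
    return 100.0 * sxy / sxx  # slope per year -> per century


def window_slope(years, temps, start, end):
    """Slope using only years in [start, end]."""
    pairs = [(x, y) for x, y in zip(years, temps) if start <= x <= end]
    return ols_slope_per_century([x for x, _ in pairs], [y for _, y in pairs])


# Synthetic annual series rising 0.013 degrees per year (1.3 per century), no noise.
years = list(range(1900, 2001))
temps = [0.013 * (y - 1900) for y in years]
print(ols_slope_per_century(years, temps))
print(window_slope(years, temps, 1900, 1930))
```

On real station data the slope is sensitive to the start and end years and to gaps, so comparing stations only makes sense over a common period.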

Gordon Ford says:

November 1, 2010 at 8:03 am

A good first cut would be to check the station location on Google Earth or similar. This would catch rural stations in the middle of a Walmart parking lot or urban stations adjacent to a farm house.

What is apparent is that global temperature records have unresolved QA/QC problems and until they are resolved any conclusions drawn from the data should be filed under fiction.

%%%%%%%%%

I’ve posted google tours of all the locations so people can do this. 7280 stations. Not a one man job.
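For anyone who wants to check a handful of stations by eye, a minimal sketch of writing inventory coordinates to a KML file that Google Earth can open is below. It is not the tour files mentioned above; `stations_to_kml` is a hypothetical helper and the single station is the Jokkmokk entry quoted elsewhere in this thread. KML puts longitude before latitude inside `<coordinates>`, which is an easy place to introduce yet another location error.

```python
from xml.sax.saxutils import escape


def stations_to_kml(stations):
    """Build a minimal KML document with one Placemark per station.

    `stations` is an iterable of (station_id, name, lat, lon) tuples.
    KML coordinates are written lon,lat (longitude first).
    """
    placemarks = []
    for sid, name, lat, lon in stations:
        placemarks.append(
            "  <Placemark>\n"
            f"    <name>{escape(name)}</name>\n"
            f"    <description>{escape(sid)}</description>\n"
            f"    <Point><coordinates>{lon},{lat}</coordinates></Point>\n"
            "  </Placemark>"
        )
    return (
        '<?xml version="1.0" encoding="UTF-8"?>\n'
        '<kml xmlns="http://www.opengis.net/kml/2.2">\n'
        "<Document>\n" + "\n".join(placemarks) + "\n</Document>\n</kml>\n"
    )


kml = stations_to_kml([("64502142000", "JOKKMOKK", 66.63, 19.65)])
print(kml)  # save this text as a .kml file and open it in Google Earth
```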

David Jones says:

November 1, 2010 at 6:19 am

You’re not even trying Mosher, I found one that was several thousand kilometres out.

$$$$$$$

You have to be careful: there are some where GHCN uses the historical data and WMO only publishes current data. In any case, since v3 is in beta we can hopefully get them to fix the issue. Other people (climate science types) are working this issue, so hopefully the problem will get fixed.

Early results say it doesn’t make a difference. I stress early. Since R is interactive I can often just take a quick look to see if the prelim work gives me any kind of indication.

The indication is this: the rural/urban count doesn’t change much. Again, an early indication.

Murray,

The march of the thermometers makes no difference. That’s been shown repeatedly.

In the coming months I suspect it will be shown again with a huge database of work.

Pamela

“So we stick to our beliefs and refuse to budge out of fear of being wrong.”

Yup. But that goes for doubters as well.

I, too, have some issues with Dr. Long’s methodology; that is why I’d like somebody else to take a crack at plotting rural data with more robust station selection criteria (something like the category 1 & 2 stations identified in surfacestations.org).

I do not understand the last sentence.

Actually, I’d just like to see the rural curve. I accept that time of observation adjustments are appropriate. I’m more skeptical of the code that attempts to do data infilling. Ultimately, I’m much more interested in the slope of the curve from 1900-1930 vs the slope from 1960-2000+; the overall slope is much less interesting, since it appears to be essentially a curve fit of a 1-1/2 cycle oscillation.

tty.

Thanks, checking all this stuff by hand is hard work. Let’s look at one of yours:

64502142000 JOKKMOKK 66.63 19.65 264 313R -9HIFOLA-9x-9WOODED TUNDRA A 0

WMO=02142

Imod = 000

and there are no other stations with that WMO. Sometimes GHCN will use the SAME WMO but indicate a different location using the IMOD flag, which is why I eliminated those cases from my FIRST pass through the data.
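The ID breakdown above follows the GHCN v2 layout: an 11-digit station ID made of a 3-digit country code, the 5-digit WMO number and a 3-digit modifier (imod). A sketch of splitting IDs and listing WMO numbers shared by several inventory entries (the cases set aside on a first pass) might look like this; the helper names and the two extra IDs in the example are hypothetical.

```python
from collections import defaultdict


def split_ghcn_v2_id(station_id):
    """Split an 11-digit GHCN v2 station ID into (country, wmo, imod)."""
    if len(station_id) != 11 or not station_id.isdigit():
        raise ValueError(f"unexpected station id: {station_id!r}")
    return station_id[:3], station_id[3:8], station_id[8:]


def shared_wmo_ids(station_ids):
    """Map each WMO number used by more than one inventory entry to those entries."""
    groups = defaultdict(list)
    for sid in station_ids:
        _, wmo, _ = split_ghcn_v2_id(sid)
        groups[wmo].append(sid)
    return {wmo: ids for wmo, ids in groups.items() if len(ids) > 1}


# "64502142000" is the Jokkmokk ID from the inventory line above;
# the other two IDs are made up to show a shared WMO number.
ids = ["64502142000", "64502142001", "64502128000"]
print(split_ghcn_v2_id("64502142000"))  # ('645', '02142', '000')
print(shared_wmo_ids(ids))              # {'02142': ['64502142000', '64502142001']}
```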

Now let’s see what WMO says:

02151 0 JOKKMOKK FPL 66 29N 20 10E 275

See the problem? Actually WMO has no entry for 02142

Now the GHCN lat indicates a station at 66.63°, or roughly 66°38'.

WMO has

02141 0 TJAKAAPE 66 18N 19 12E 582

02161 0 NATTAVAARA 66 45N 20 55E

Now, that is not the end of the searching.

There is yet another master list that solves this mystery

SWE SE Sweden – Jokkmokk AFB SEaaESNJ ESNJ 2142 m ESNJ A ICA09 2142 21420 66.6333 19.65 C 264 264 Europe/Stockholm

is that confusing? Well, it tells me that GHCN gets the data for this from ICA09 documents, not from WMO.

Simply put, GHCN appears to get data from WMO and other sources. So, to audit them properly I have to figure out where they got the data from. My master list tells me exactly where the data comes from and the quality of the information. That should allow me to correct GHCN, add location precision, and account for data that is not precise. But it’s a huge mountain of checking. Automating the process is a nightmare.
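The WMO list gives positions as whole degrees and minutes with a hemisphere letter, while GHCN uses decimal degrees, so comparing them means converting one to the other and measuring the separation. The sketch below parses the 'DD MMH' form and computes a great-circle (haversine) distance on a 6371 km sphere. `parse_wmo_coord` and `haversine_km` are hypothetical helpers, and the parser assumes there is no seconds field.

```python
import math


def parse_wmo_coord(text):
    """Parse a 'DD MMH' string such as '66 29N' or '20 10E' to signed decimal degrees."""
    deg_str, rest = text.split()
    hemi = rest[-1].upper()
    minutes = int(rest[:-1])
    value = int(deg_str) + minutes / 60.0
    return -value if hemi in ("S", "W") else value


def haversine_km(lat1, lon1, lat2, lon2, radius_km=6371.0):
    """Great-circle distance between two points on a sphere of the given radius."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * radius_km * math.asin(math.sqrt(a))


ghcn = (66.63, 19.65)                                        # GHCN 64502142000 JOKKMOKK
wmo = (parse_wmo_coord("66 29N"), parse_wmo_coord("20 10E"))  # WMO 02151 JOKKMOKK FPL
print(round(haversine_km(*ghcn, *wmo), 1))
```

For these two entries the separation comes out at roughly 28 km. As the ICA09 entry above shows, that gap is between two different source records, not necessarily an error in either.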

tty:

“While these errors are not enough to affect the large scale climate, in my opinion it is quite useless to try and correlate the GHCN data with any kind of geodata at a higher resolution than about 0.1-0.2 degrees (10-20 km).”

There are many stations where there are no lights for 60km in any direction.

So my approach will be this.

1. Correct GHCN as best I can given the other documents I have. Especially the rounding errors.

2. Characterize the distribution of the errors (e.g., 95% within 5 km… FOR EXAMPLE)

3. Reclassify the stations using a bounding box approach. Not just the pixel, but surrounding pixels as well. All the code to do that is done and tested. The key is this:

A. characterizing the average error in station location

B. looking at the pixel location error (1-2km)

C. adjusting my bounding box accordingly.
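Steps 2 and 3 above can be sketched as: take a set of measured position errors (station inventory versus a better source), pick a high percentile, and add an allowance for pixel location error to get the search radius. The nearest-rank percentile, the 95% level and the 2 km pixel allowance (the top of the 1-2 km range in step B) are assumptions for illustration, as are the function names; simply adding the two errors is a conservative choice, not necessarily the one intended here.

```python
import math


def nearest_rank_percentile(values, pct):
    """Nearest-rank percentile (0 < pct <= 100) of a non-empty list of numbers."""
    if not values or not 0 < pct <= 100:
        raise ValueError("need values and 0 < pct <= 100")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def box_radius_km(location_errors_km, pixel_error_km=2.0, pct=95.0):
    """Search radius: the pct-th percentile station error plus an assumed pixel error."""
    return nearest_rank_percentile(location_errors_km, pct) + pixel_error_km


# Hypothetical station location errors in km (not real GHCN figures).
errors_km = [float(i) for i in range(1, 21)]
print(nearest_rank_percentile(errors_km, 95))  # 19.0
print(box_radius_km(errors_km))                # 21.0
```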

So, in H2010 a station is rural if the pixel at its location is dark.

In my approach I will correct the stations as much as feasible and look at all the surrounding pixels, requiring them to be dark for 10 km around the “location” of the station.

Make sense?

Then I can screen them further with population data going back to 1850 for every 10km*10km grid.

That’s the plan.

1. correct the stations

2. characterize the error

3. screen using that error knowledge
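The difference between the single-pixel rule and a bounding-box screen can be sketched on a toy grid. Everything here is hypothetical: the grid layout (north-west corner origin, rows running south, 1/120° pixels), the `threshold` for 'dark', and the function names; the actual nightlights georeferencing and the threshold H2010 uses are not given in this thread. The box is a latitude/longitude rectangle of ± `radius_km`, widened in longitude by 1/cos(latitude), and pixels off the edge of the grid are simply skipped.

```python
import math

KM_PER_DEG_LAT = 111.195  # approx. km per degree of latitude on a 6371 km sphere
PIXEL_DEG = 1.0 / 120.0   # 30 arc-second pixels


def pixel_index(lat, lon, lat0, lon0, px=PIXEL_DEG):
    """Row/col of the pixel containing (lat, lon); (lat0, lon0) is the grid's NW corner."""
    return math.floor((lat0 - lat) / px), math.floor((lon - lon0) / px)


def is_dark_single(grid, lat, lon, lat0, lon0, threshold=0):
    """Single-pixel rule: dark if the station's own pixel is at or below threshold."""
    r, c = pixel_index(lat, lon, lat0, lon0)
    return grid[r][c] <= threshold


def is_dark_box(grid, lat, lon, lat0, lon0, radius_km, threshold=0, px=PIXEL_DEG):
    """Box rule: dark only if every in-grid pixel within +/- radius_km is dark."""
    r, c = pixel_index(lat, lon, lat0, lon0, px)
    dr = math.ceil(radius_km / (KM_PER_DEG_LAT * px))
    dc = math.ceil(radius_km / (KM_PER_DEG_LAT * px * math.cos(math.radians(lat))))
    for i in range(max(r - dr, 0), min(r + dr, len(grid) - 1) + 1):
        row = grid[i]
        for j in range(max(c - dc, 0), min(c + dc, len(row) - 1) + 1):
            if row[j] > threshold:
                return False
    return True


# Toy 40 x 40 grid with its NW corner at 60 N, 10 E and one lit pixel.
grid = [[0] * 40 for _ in range(40)]
grid[20][26] = 5
lat0, lon0 = 60.0, 10.0
station = (lat0 - 20.5 * PIXEL_DEG, lon0 + 20.5 * PIXEL_DEG)  # centre of pixel (20, 20)

print(is_dark_single(grid, *station, lat0, lon0))                 # True
print(is_dark_box(grid, *station, lat0, lon0, radius_km=5.0))     # False
print(is_dark_box(grid, *station, lat0, lon0, radius_km=1.0))     # True
```

In the toy example the lit pixel sits roughly 3 km east of the station: the single-pixel lookup calls the site dark, a 1 km box agrees, and a 5 km box does not.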

George E. Smith says:

November 1, 2010 at 9:55 am

Well, if mistakes don’t matter and don’t affect the results, then we could stop taking the data altogether, since the data doesn’t matter, and we could simply make up the results and save a ton of money.

$$$$$$$$$$

If I have 100 dollars in the bank and I write 5 checks:

25.45

23.19

25.43

19.01

20.56.

And I do my accounting by always rounding numbers up, I can figure that

26+24+26+20+21 will tell me that I am overdrawn. Now, 117.0 is the wrong answer (the true total is 113.64). But to my question “am I overdrawn?” this “mistake” makes no difference. The mistake doesn’t make me a millionaire. I’m still broke. So, actually, some mistakes make no difference to the question being asked and the person asking the question.
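The checkbook arithmetic is easy to confirm, and it also shows when the rounding would matter: always rounding up overstates each check by less than a dollar, so with five checks the rounded total is high by less than $5. Only if the true total were less than $5 below the balance could rounding up give a false “overdrawn”; here the true total is $13.64 over. The variable names are just for illustration.

```python
import math

checks = [25.45, 23.19, 25.43, 19.01, 20.56]
balance = 100.00

exact_total = round(sum(checks), 2)                   # 113.64
rounded_up_total = sum(math.ceil(c) for c in checks)  # 26 + 24 + 26 + 20 + 21 = 117

# Rounding up overstates each check by less than 1, so by less than len(checks) overall.
max_overstatement = len(checks)

print(exact_total, rounded_up_total)
print(exact_total > balance, rounded_up_total > balance)  # True True: same answer
print(exact_total - balance > max_overstatement)          # True: margin exceeds the error
```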

Tomasz Kornaszewski says:

I thought it might be the GP/DME as well but didn’t have the patience to look through all the online tools, so I just gave some links to some of the stuff I found.

The lack of quality control in the databases impugns the credibility and competence of the people who are in charge of them. If they can’t get the easy stuff right and can’t build systems properly, why should we expect that their secret adjustment processes are sound?

Nightlights? I once flew into Riga, Latvia, in the middle of the night and the city was almost totally dark — fortunately they turned on the runway lights just before we landed! Before we make sweeping assumptions we should find out some “ground truth” as the geologists say. Now we know why there are complaints that “they don’t teach geography any more”.

Steven Mosher says:

October 31, 2010 at 11:38 pm

” unravelling the history of all the changes is tough work”

Actually it is conjecture, and not data, but inference disguised as data or “metadata”.

Mosh,

Good work. It’s good to see you picking up what Peter O’Neill started. I did note it on your blog, but I’m up to my eyes these days…

While that may explain problems with determining the reliability of stations, or trying to find the record from any particular station, it doesn’t explain why the actual locations are wrong. These are scientists. Their currency is data. Not taking care of the data is akin to bankers mislaying the money (or more accurately, their financial transactions).

The important fact is not whether the globe is warming. The important fact is that those who propose to turn our society upside down on the basis of supposed warming show a striking lack of interest in collecting and examining the data needed to determine whether it is warming or not.

richard verney says: “…In my opinion, we should only be looking at sea temperatures and satellite-collected temperatures, or sea temperatures and wholly unadjusted rural data sets. All other data sets should be disregarded. Climate is mainly driven by the sea (which covers approx. 70% of the Earth and the volume of which is a giant storage reservoir). Thus sea temperature data is the most important single issue….”

The oceans have a thermal mass over 1000 times greater than the atmosphere. Trying to tease a small warming (or cooling) signal out of atmospheric data is like trying to measure changes in the weight of a bull by how hard he snorts.

Verity Jones says:

November 1, 2010 at 4:32 pm

Mosh,

Good work. It’s good to see you picking up what Peter O’Neill started. I did note it on your blog, but I’m up to my eyes these days…

$$$$$$$$$

Peter does fantastic original work. Ron Broberg also did some great work that highlighted the stations-in-the-ocean problem. Sorting this out is a big job.

I cannot actually believe they round lat/lon off to 2 decimal places! That’s such an embarrassingly basic error to make; anyone who’s ever worked with plotting points on a Google map would know that within 30 seconds.

Simon says:

November 2, 2010 at 6:33 am

I cannot actually believe they round lat/lon off to 2 decimal places! That’s such an embarrassingly basic error to make; anyone who’s ever worked with plotting points on a Google map would know that within 30 seconds.

#########

Yep! The other thing I wanted to show was what this does when you do look-ups into a grid that has 1/120th-of-a-degree resolution.

While I stopped short of proving it mathematically, it seems that if you take an original location in degrees/minutes/seconds, transform it to decimal and round, then your data points are highly likely to land on the grid BOUNDARIES and on the grid corners. Horrible stuff to track down.

Of the 7280 stations, well over half will land on a nightlights pixel boundary.

All because of rounding.

The boundary pixel problem means that it is next to impossible to verify against GISS.
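The boundary effect can be checked exactly with rational arithmetic. Assuming pixel edges fall on whole multiples of 1/120°, a coordinate sits on an edge exactly when the coordinate times 120 is an integer. The sketch below (hypothetical helper `on_pixel_boundary`) tests the Jokkmokk inventory values and counts how many two-decimal fractions land on an edge. It counts possible values, not actual stations, so it does not by itself reproduce the “well over half” figure; coordinates recorded to only one decimal, or to whole minutes, always land on an edge.

```python
from fractions import Fraction

PIXELS_PER_DEG = 120  # assumed 1/120 degree (30 arc-second) grid with edges on k/120


def on_pixel_boundary(coord):
    """True if a coordinate, given as a decimal string, lies exactly on a pixel edge."""
    return (Fraction(coord) * PIXELS_PER_DEG).denominator == 1


# Jokkmokk's GHCN coordinates
print(on_pixel_boundary("66.63"))  # False: 66.63 * 120 = 7995.6
print(on_pixel_boundary("19.65"))  # True:  19.65 * 120 = 2358

# Of the 100 possible hundredths, only multiples of 0.05 land on an edge.
edges = [k for k in range(100) if on_pixel_boundary(f"0.{k:02d}")]
print(len(edges))  # 20

# One decimal place (0.1 deg = 12 pixels) and whole minutes (1/60 deg = 2 pixels) always do.
print(all(on_pixel_boundary(f"0.{k}") for k in range(10)))                    # True
print(all((Fraction(m, 60) * PIXELS_PER_DEG).denominator == 1 for m in range(60)))  # True
```

A point exactly on an edge is where a `floor()`-style pixel lookup becomes fragile: 19.65 has no exact binary floating-point representation, so which side of the edge it lands on can depend on how the index is computed, which fits the difficulty of matching GISS described above.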

Wow, yet another example of how poor the data used by climate scientists to try and measure a 0.? temperature anomaly is. The same type of problem came out strongly in the CRU Climategate scandal, thanks to the HARRY_READ_ME file. No wonder Jones was willing to destroy the data rather than allow other scientists to try and reproduce his department’s work!

This is not the way science should be conducted, and there are plenty of other examples of ‘bending the truth’ for political gain. It would appear the modern scientific methods used by the IPCC climate cabal can be split into two forms – the inductive and the deductive.

INDUCTIVE:

* formulate hypothesis

* apply for grant

* perform experiments or gather data to test hypothesis

* alter data to fit hypothesis

* publish

DEDUCTIVE:

* formulate hypothesis

* apply for grant

* perform experiments or gather data to test hypothesis

* revise hypothesis to fit data

* backdate revised hypothesis

* publish

(Thanks to Tom Weller’s book – SCIENCE MADE STUPID)

Tenuc.

The problems with the location data in GHCN predate the whole Jones issue and are unrelated.

When the data was created there was no thought of using it to geolocate. The problem is being worked on by NOAA. It’s hard, tedious work.